Blog

Human interaction is based on language, on context, and on the world knowledge we share. As a Voice AI company, we know that emotion is a key factor in this interaction. Emotional expression moves us and triggers a collective response. It plays a central role in society and shapes the decisions we make. When creating virtual realities, new dimensions, and augmented experiences, this factor cannot be missing.

2021 has been an exciting year for our researchers working on the recognition of emotions from speech. Benefiting from recent advances in transformer-based architectures, we have for the first time built models that predict valence with precision as high as that for arousal.

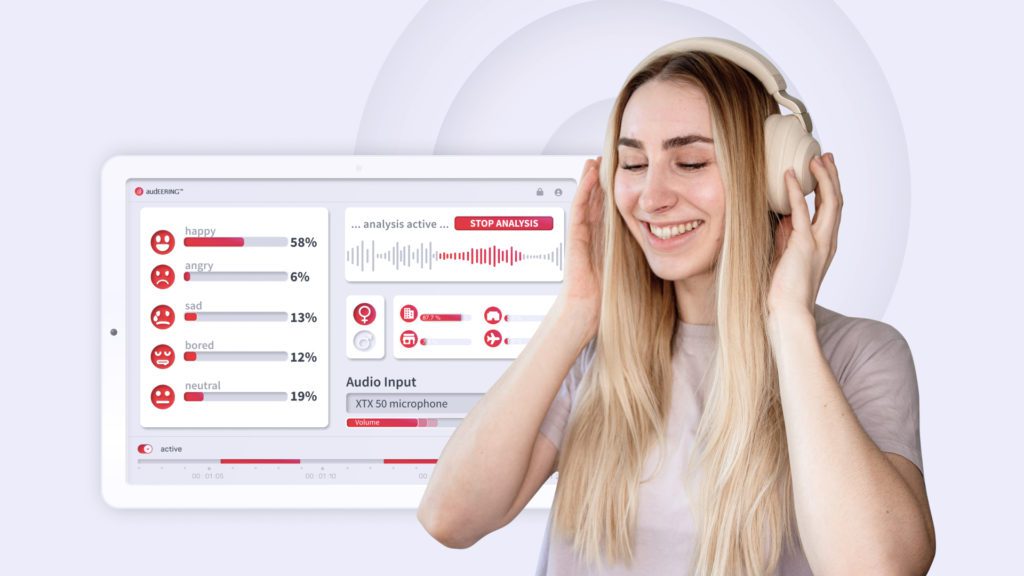

We are proud to announce a new class of next-generation emotion models, coming to devAIce with the latest 3.4.0 release of the devAIce™ SDK/Web API.

Developing AI technology at audEERING requires us to understand human perception. In everyday life, perception enables us to recognize the emotional state of our communication partner in different situations. In the process of Human Machine Learning, we need to give the algorithm essential input. How do we at audEERING create AI?

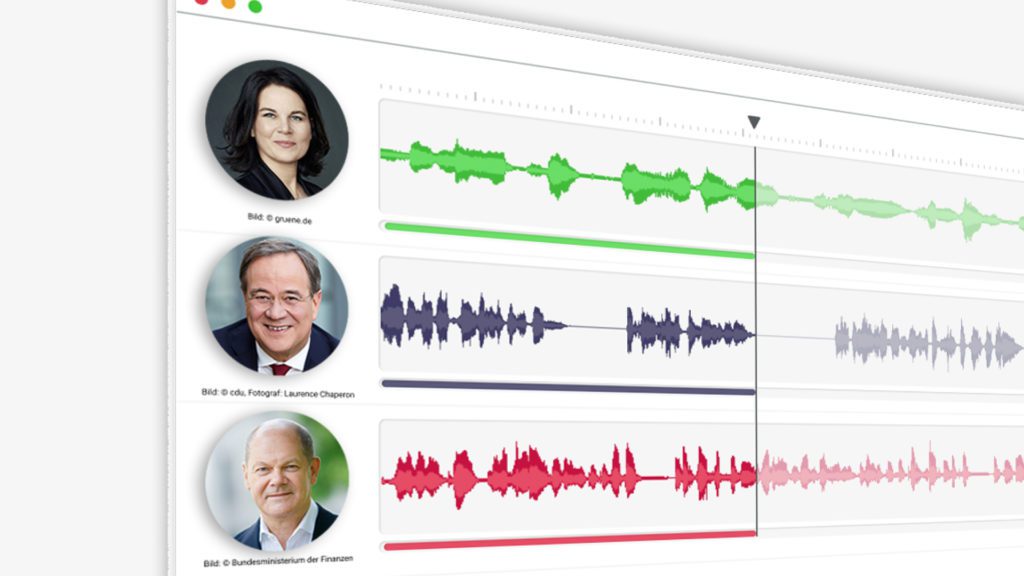

The German politicians Annalena Baerbock, Armin Laschet, and Olaf Scholz were analyzed by audEERING’s Audio AI during their candidacy for chancellor. audEERING's analyses do not refer to the content of the speeches, but to their acoustic, linguistic, and emotional characteristics. These are identified using scientific procedures and audEERING's award-winning AI technology devAIce™.

As last year, we are proud to have had the chance to present our ideas and be part of the devcom Developer Conference 2021. In a panel discussion, we talked about the entertAIn brand. If you missed the talk, you can watch it on our YouTube channel.

Recognizing and perceiving emotional expression is an essential part of human communication. Therapy that aims to develop the socio-emotional communication skills of autistic children therefore has to focus on it. The ERIK project is developing a new form of such therapy.

The EU research project EASIER aims to design, develop and validate a complete multilingual machine translation system to serve as a framework for accessible communication for deaf and hearing people.

Young developers from all over the world used audEERING’s emotion detection to create their own games. Take a look and enjoy a new way of gaming.

This year, I took a more practical approach and presented our visions for the future to you, with solid case studies behind each one of them.

The human interaction between a doctor and his or her patient is one of the most important processes in the diagnosis and treatment of any disease.