Robots Learn Empathy

audEERING and Hanson Robotics develop robots with the highest social competence for everyday use. Hanson Robotics, one of the world's leading robot manufacturers, integrates devAIce, the automated emotion recognition technology from the German AI company audEERING. This higher level of human-machine interaction opens up new opportunities for using robots at work, for example in caregiving. By recognizing the emotions of their counterparts from their voices, robots increase their social competence.

devAIce® SDK 3.6.1 Update

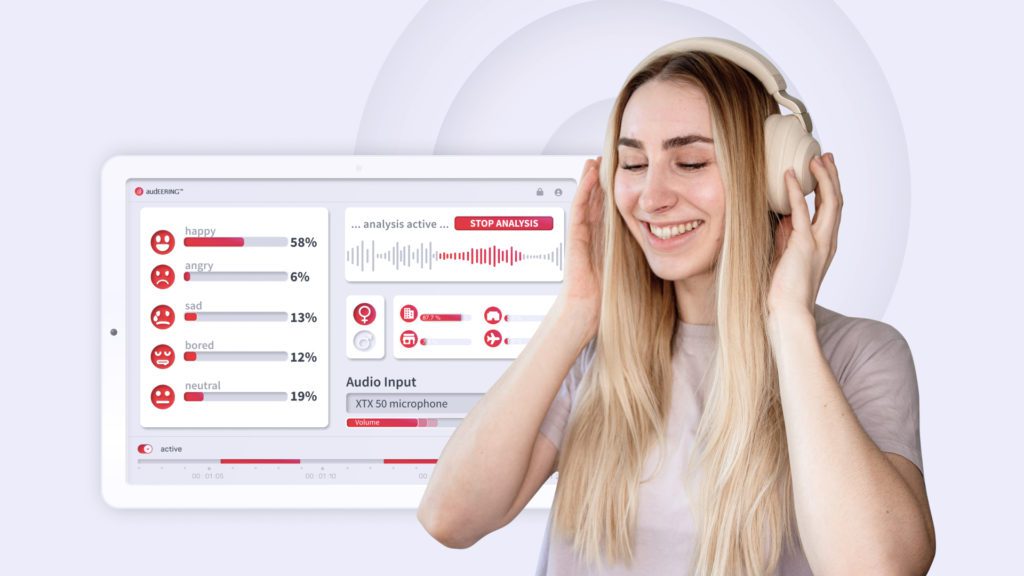

The devAIce® team is proud to announce the availability of devAIce® SDK 3.6.1, which brings several major enhancements, new functionality, and smaller fixes since the last publicly announced version, 3.4.0. This blog post summarizes the most important changes introduced in devAIce® SDK since then.

SHIFT – Cultural Heritage transformation project kicks off under Horizon Europe

SHIFT (MetamorphoSis of cultural Heritage Into augmented hypermedia assets For enhanced accessibiliTy and inclusion) supports the adoption of digital transformation strategies and the uptake of tools within the creative and cultural industries (CCI), where progress has been lagging.

audEERING is now participating in the SHIFT project with the goal of synthesizing emotional speech descriptions of historical exhibits. This will change the way people experience historical monuments, especially visually impaired visitors.

Affective Avatars & The Voice AI Solution

We use avatars to show our identity or to assume other identities, and we want to make sure we express ourselves the way we want. The key factor in expression is emotion. Without recognizing emotions, we have no way of modifying a player's avatar to reflect their emotions and individuality.

With entertAIn play, recognizing emotion becomes possible.

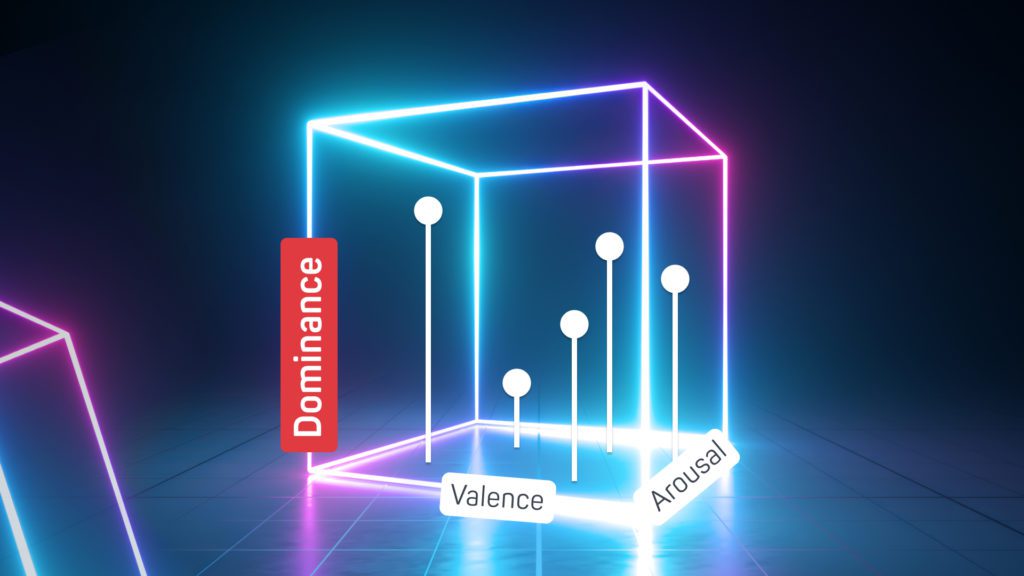

Closing the Valence Gap in Emotion Recognition

2021 has been an exciting year for our researchers working on recognizing emotions from speech. Benefiting from recent advances in transformer-based architectures, we have for the first time built models that predict valence with precision as high as that for arousal.

New Updates on audEERING’s Core Technology: devAIce SDK/Web API 3.4.0

We are proud to announce a new class of next-generation emotion models coming to devAIce with the latest 3.4.0 release of the devAIce™ SDK/Web API.

ERIK – Emotion Recognition for Autism Therapy

Recognizing and perceiving emotional expression is an essential part of human communication. Therapy that aims to develop the socio-emotional communication skills of autistic children therefore has to focus on it. The ERIK project is developing a new form of such therapy.

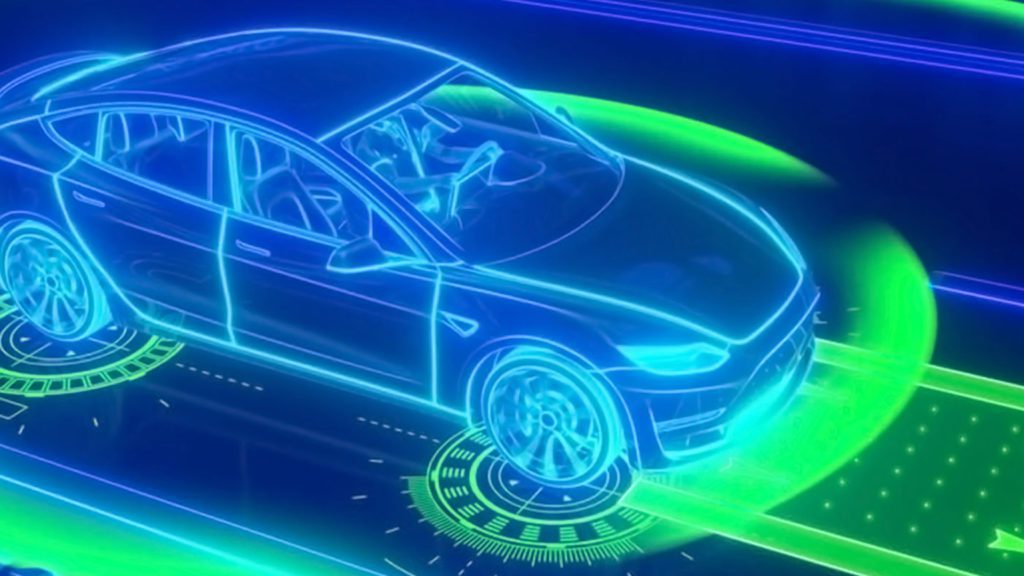

SEMULIN: Audio AI for Autonomous Cars

The market for autonomous driving is projected to grow from 5.7 billion US dollars in 2018 to 60 billion US dollars in 2030. This shows the potential of the technology and is one of the reasons why the German government provided funding for project SEMULIN.

Integrating Emotions into Your Game

If you have been following our blog series, you know that in the first post we explained the importance of emotions and their role in our daily interactions. In the second post, we focused on human-computer interaction in the context of video games, and in the third, we explained how emotions can be the missing layer in human-computer interaction. This week, we look at how integrating this emotional layer into your products can benefit you and, as a result, make the world a better place.

Emotional Interaction – The Missing Link

In our previous blog posts, we explained the role of emotions in video games and gave an overview of human-computer interaction and what it means for the video game industry. We found that, from a technical perspective, we have achieved almost complete freedom of movement in 3D environments. For example, CAVEs (Cave Automatic Virtual Environments) let you navigate naturally through a virtual environment in real time.

What AI Has to Say About Game of Thrones: Battle of Winterfell

Well, I think you can imagine what happened next. We placed microphones in the conference room and recorded the audience throughout the screening. Then we passed the recorded data to ExamAIner, a web application that uses audEERING's emotion recognition technology in the backend.