Makes you feel comfortable and safe.

The Natural Reader in Automotive

The natural voice changer

Changes in your vocal expression

Hundreds of muscles are needed to produce voice and speech. Therefore, the smallest physical changes in tone and articulation become noticeable. This enables the acoustic analysis of vocal expression.

With voice analysis technology, it becomes easy to harness the potential of the human voice.

- Vocal expression detection based on tone of voice

- Tone of voice analysis based on valence, arousal, and dominance

- Individual voice analysis based on speaker attributes

Real-time voice interpreter

Even faster than 1 second

Natural and effective interaction between humans and cars is achieved with Voice AI. It enables real-time feedback on vocal expression in under 1 second.

The future of automotive is becoming empathetically competent. Drivers can benefit from various functions when interacting with their car:

- Improved customer experience

- Voice-based setting options

- No video recordings needed

- No data storage required

- High data security

“Don’t touch turbo boost! Something tells me you shouldn’t turbo boost.”

KITT (K.I.T.T.), the voice of the Knight Industries Two Thousand’s microprocessor, from the Knight Rider series.

Human-car interaction

Based on voice and context

audEERING’s Audio AI solution goes beyond voice.

In everyday communication, the context makes the difference. As part of a general intelligent dialog system, audEERING’s core technology devAIce® opens up a completely new way of interaction.

Future human-car experiences will make the car an empathic companion. Through detection and understanding, interaction can be improved to achieve greater satisfaction and a safer driving experience for everyone in and out of the vehicle.

Analysis of vocal expression can significantly change the driver’s experience in the future.

Functionalities concerning safety aspects can be optimized based on the analysis of the driver’s voice. Negative expressions increase risk while driving. Taking this into account decisively improves safety for all road users.

Affective driving is responsible for more than 56% of traffic accidents.

Simultaneously, based on the driver’s positive expressions and preferences, the smart car is able to make suggestions from real-time analysis that create comfort and improve well-being on the road.

80% of all drivers drive angry or frustrated. The risk of an accident is 9.8 times higher for affective drivers.

Multiple application cases

Natural interaction with the voice assistant is possible in several application cases.

Mood Diary: vocal expression tracked over time.

This enables the detection of phases of high and low emotional energy. Viewed in context, this allows particularly stressful and depressive phases of the driver to be analyzed.

The Mood Diary can be used independently by the person driving and serve as a basis for decision-making in certain situations:

- Route planning – especially for long and stressful journeys

- Decisions about driving assistance – physical and technical

- Seeking professional help in the case of strongly negative phases
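
As a rough illustration of how tracking over time could surface phases of high and low emotional energy, the sketch below smooths a series of arousal scores with a trailing moving average and labels each entry. The `MoodEntry` type, the 0 to 1 score range, the window size, and both thresholds are assumptions made for this example; none of them are taken from devAIce®.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class MoodEntry:
    """Hypothetical diary entry: minutes into the trip and an arousal score in [0, 1]."""
    minute: int
    arousal: float


def label_phases(entries, window=3, high=0.7, low=0.3):
    """Label each entry 'high', 'low', or 'neutral' using a trailing moving average."""
    labels = []
    for i, entry in enumerate(entries):
        # Average the current entry with up to `window - 1` preceding entries.
        recent = entries[max(0, i - window + 1): i + 1]
        smoothed = mean(e.arousal for e in recent)
        if smoothed >= high:
            labels.append((entry.minute, "high"))
        elif smoothed <= low:
            labels.append((entry.minute, "low"))
        else:
            labels.append((entry.minute, "neutral"))
    return labels


# Illustrative values only, not measured data.
diary = [MoodEntry(0, 0.2), MoodEntry(10, 0.2), MoodEntry(20, 0.9),
         MoodEntry(30, 0.9), MoodEntry(40, 0.9)]
print(label_phases(diary))
```

Smoothing before labelling means a single loud utterance does not immediately count as a high-energy phase; only a sustained change does.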

Voice analysis for anger management:

Anger tempts people to drive too fast because it increases risk-taking. An angry expression leads to faster speech, more precise articulation, and fewer pauses, which makes risky driving behaviour quasi-audible. Our solution addresses this by:

- Proposing breaks

- Playing a specific music selection, with the result stored in the Mood Diary to verify the calming effect on the driver

- Adapting the car’s voice to the driver’s voice (shown to reduce accidents: Nass et al., 2005)

- Giving authentic feedback that sensitizes drivers and creates empathy towards other drivers (Roidl et al., 2014)

Kids in the backseat:

When children get loud, the risk of driver distraction and cognitive overload is increased. To control this, the voice assistant:

- Offers an automatic entertainment program for backseat screens

- Proposes the children’s favourite music or radio play

- Engages children in games, voice chat with avatars, etc.

Significant changes in the user’s voice can be tracked through interactions with voice assistants. Voice analysis can be used for health monitoring to identify vocal biomarkers.

Possible vehicle actions:

- Suggestion to call doctor for appointment or check-up

- Emergency calls – hospital, doctor or emergency contact

- Emergency stop & warnings in autonomous driving

Identification of dangerous situations in the car:

- Detection of alcohol intoxication of the driver or side effects of strong medication

- Automatic detection of violence and aggression by drivers and passengers (car sharing, taxis, etc.)

Possible vehicle actions:

- Autonomous driving, emergency stop & warnings for other drivers

- Silent alarm to police or taxi operator in case of violent passengers

Multimodal sleepiness detection enhanced by audio:

- Non-speech sounds: yawning, snoring

- Analyzed speech from voice prompts and telephone calls

Possible action points:

- Suggestion to take a break at nearby restaurants

- Changing climate settings, playing sounds & selecting upbeat music

- Autonomous driving, emergency stop & warnings for other drivers

Enhancing driver attention control systems with audio data:

General detection of cognitive load for better stress management.

- Detecting whether the driver is distracted by a conversation over the phone or with passengers

- Measuring stress or high cognitive load in speech (irregular speech rhythm, pauses, repetitions, hesitations, high arousal, etc.)

- Detecting sound events that might indicate or cause driver stress (children crying, objects falling, etc.)

Possible vehicle actions:

- Adaptation of the voice assistant interactions (simplifying conversation, lowering speaking rate, pausing or auto-answering phone calls)

- Autonomous driving, emergency stop & warnings
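
To make the link between detected cues and assistant behaviour concrete, here is a minimal rule-based sketch. The `SpeechCues` fields, their value ranges, and the two limits are hypothetical and chosen only for illustration; a real system would derive such signals from the audio analysis and would likely use a learned model rather than fixed rules.

```python
from dataclasses import dataclass


@dataclass
class SpeechCues:
    """Hypothetical per-utterance cues; field names and ranges are assumptions."""
    arousal: float      # 0 (calm) to 1 (highly aroused)
    hesitations: int    # number of hesitations or repetitions detected
    on_phone: bool      # whether the driver is currently in a phone call


def choose_adaptations(cues, arousal_limit=0.75, hesitation_limit=3):
    """Return voice assistant adaptations for the given cues (illustrative rules)."""
    overloaded = (cues.arousal > arousal_limit
                  or cues.hesitations > hesitation_limit)
    actions = []
    if overloaded:
        actions.append("simplify conversation")
        actions.append("lower speaking rate")
        if cues.on_phone:
            # Only relevant when a call is actually in progress.
            actions.append("pause phone call")
    return actions


print(choose_adaptations(SpeechCues(arousal=0.9, hesitations=0, on_phone=True)))
print(choose_adaptations(SpeechCues(arousal=0.2, hesitations=1, on_phone=False)))
```

Keeping the rules in one small function makes the thresholds easy to tune per driver or per vehicle.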

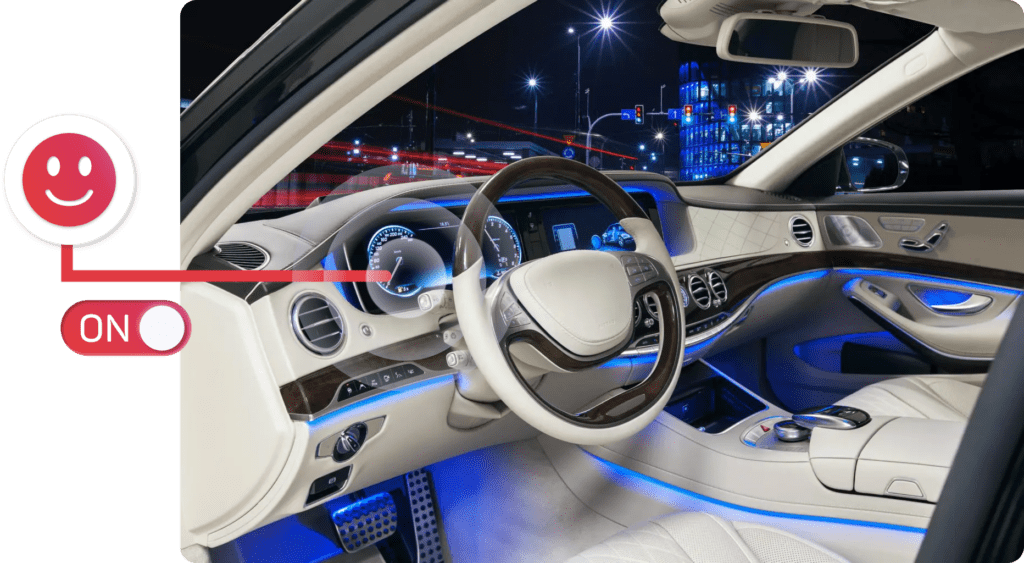

Customized mood settings

For a comfortable ride

Depending on the current mood, the car can be given a customized interior. Various functionalities of a modern voice assistant in the car can be adapted to the results of the voice analysis.

To match the driver’s expression, the interior mood effects are adapted through:

- Mood-based interior light

- Motor sound

- Seat comfort

- Temperature

Entertainment settings

Natural communication & humor

Modern voice assistants already use humorous content – so-called Easter Eggs – for easy human-machine interaction.

Entertainment suggestions based on mood, speaker ID, and interactive experiences between driver and vehicle lead to natural interaction. Information about the driver’s mood and tracked preferences can be shared through connected devices:

- Auto volume, pausing, etc. based on the driver’s mood

- Music selection based on mood

- Entertainment selection for backseats

devAIce®

Compatible with the dashboard

devAIce®, the core technology of audEERING®, is available as a Web API, as an SDK, and as the devAIce® XR plug-in for Unity and Unreal. This allows you to realize a wide range of applications.

With devAIce® on the dashboard, you get state-of-the-art technology:

- Machine learning with deep neural networks trained through unsupervised learning

- 7,000+ interpretable voice parameters measurable

- Highly accurate AI, trained on millions of data points

- Light-weight code, low resource consumption

Test drives in the metaverse

Voice AI plug-in for XR

The devAIce® XR plug-in for Unity and Unreal creates the future of test driving. With voice analysis, this will be possible in the metaverse. You can get deep insights into your driver’s experience.

It’s easy to implement in your virtual reality. Expressions matter, so let them matter in the virtual world. You can choose from the different features available in our core technology devAIce®.

With the plug-in, vocal expression analysis and speaker attributes become part of your virtual reality.

Contact us.

Contact audEERING® now to schedule a demo or discuss how our products and solutions can benefit your organization.