What’s new in devAIce SDK 3.6.1

The devAIce team is proud to announce the availability of devAIce SDK 3.6.1, which comes with a number of major enhancements, exciting new functionality, and smaller fixes since the last publicly announced version, 3.4.0. This blog post summarizes the most important changes introduced in devAIce SDK since then.

Emotion model updates

- New emotion dimension “Dominance”

The Arousal-Valence model is by far the most common approach to representing and describing human emotions in terms of emotion dimensions, i.e. as points on a hypothetical emotion plane. In this model, emotions are identified by two numbers: one that measures the amount of arousal (activation/energy) associated with an emotion and one that measures valence (positivity/negativity). Considering that it describes emotions with just a pair of numbers, the model distinguishes surprisingly well between the plethora of human emotions encountered in the wild. Nevertheless, it fails to capture the nuances of certain related emotions that fall on the same spot on the arousal-valence plane but have utterly different characteristics. For instance, the emotion categories “anger” and “fear” are both associated with high arousal and negative valence but are very different in nature. To distinguish between such emotions, a third dimension, often termed “Dominance”, is needed. This dimension indicates whether an emotion is dominant, i.e. the subject is in control of a situation, or submissive, i.e. the subject lacks control. In the case of “anger” and “fear”, the former would be assigned high dominance because the subject is in control, and the latter low dominance because fear is often felt due to a lack of control.

In the latest releases of devAIce, we added support for the Dominance dimension in the dimensional emotion models of both the Emotion and Emotion (Large) modules. When evaluated on our benchmark sets, which contain a broad mix of real-world and acted emotional speech data, these new models predict Dominance with average Concordance Correlation Coefficients (between the devAIce SDK outputs and our gold standard labelled by human experts) of up to 0.7.
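For readers unfamiliar with the metric, the following is a minimal sketch of how a Concordance Correlation Coefficient can be computed from model outputs and gold-standard labels, using Lin's standard definition. The function name and the sample values are illustrative assumptions only; they are not taken from the devAIce SDK or from our benchmark data, and our internal evaluation pipeline may differ in detail.

```python
from statistics import fmean


def concordance_cc(predictions, gold):
    """Lin's Concordance Correlation Coefficient of two equal-length sequences.

    1.0 means perfect agreement, 0.0 no agreement, -1.0 perfect disagreement.
    Unlike Pearson correlation, CCC also penalizes offsets in mean and scale.
    """
    if len(predictions) != len(gold) or len(gold) < 2:
        raise ValueError("need two sequences of equal length >= 2")

    mean_p, mean_g = fmean(predictions), fmean(gold)
    # Population (1/N) variances and covariance.
    var_p = fmean((p - mean_p) ** 2 for p in predictions)
    var_g = fmean((g - mean_g) ** 2 for g in gold)
    cov = fmean((p - mean_p) * (g - mean_g) for p, g in zip(predictions, gold))

    denominator = var_p + var_g + (mean_p - mean_g) ** 2
    if denominator == 0:
        raise ValueError("CCC is undefined for two identical constant sequences")
    return 2 * cov / denominator


# Made-up dominance values, for illustration only.
gold_labels = [0.2, 0.5, 0.7, 0.9]
model_outputs = [0.3, 0.4, 0.8, 0.8]
print(f"CCC = {concordance_cc(model_outputs, gold_labels):.3f}")
```

Because the denominator includes the squared difference of the means, a model whose outputs track the labels perfectly but are consistently shifted still scores below 1.0.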

We encourage customers of devAIce to consider leveraging the Dominance dimension whenever a fine-grained distinction between vocal emotion expressions is important. The behavior and accuracy of Arousal and Valence have not changed considerably with the addition of the Dominance dimension.

- Enhanced categorical model in the Emotion (Large) module with improved accuracy for prototypical vocal emotion expressions

The latest devAIce releases come with a refined categorical model in the emotion modules. audEERING has always focused on delivering the best accuracy on natural, everyday vocal emotion expressions. As a result, our models did not always recognize acted, prototypical emotion expressions correctly. However, these kinds of emotion expressions are frequently used by people testing a product with speech emotion detection, or by users acting out emotions in their voice, such as in video games that use our entertAIn plugins.

With the new update of the “Emotion (Large)” module in version 3.6.1, we have improved accuracy (Unweighted Average Recall, UAR) by up to 18% relative, measured on our combined benchmarks with natural and prototypical emotion expressions. The largest improvements, up to 20-30% relative, are seen on the prototypical data in our benchmarks, and they come without any sacrifice in the accuracy of detecting natural emotion expressions. The accuracy of the “Emotion” module has also been improved by 10% relative, on average.
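To make the terms concrete, here is a small sketch of how Unweighted Average Recall and a relative improvement can be computed. UAR is the mean of the per-class recalls, so every emotion category counts equally regardless of how many samples it has. The function names, labels and scores below are illustrative assumptions, not actual benchmark data.

```python
from collections import defaultdict


def unweighted_average_recall(gold, predicted):
    """Mean of per-class recalls; each class counts equally."""
    if not gold or len(gold) != len(predicted):
        raise ValueError("need two non-empty sequences of equal length")
    total = defaultdict(int)
    correct = defaultdict(int)
    for g, p in zip(gold, predicted):
        total[g] += 1
        if g == p:
            correct[g] += 1
    return sum(correct[c] / total[c] for c in total) / len(total)


def relative_improvement(old_score, new_score):
    """Improvement expressed as a fraction of the old score."""
    return (new_score - old_score) / old_score


# Made-up labels: four "neutral" samples, two "anger" samples.
gold = ["neutral"] * 4 + ["anger"] * 2
pred = ["neutral"] * 4 + ["anger", "fear"]

# Recall is 1.0 for "neutral" and 0.5 for "anger", so UAR is 0.75,
# while plain accuracy would be 5/6 because "neutral" dominates.
print(unweighted_average_recall(gold, pred))

# A hypothetical UAR going from 0.50 to 0.59 is an 18% relative gain.
print(f"{relative_improvement(0.50, 0.59):.0%}")
```

Note that a relative improvement is measured against the previous score, so 18% relative is not the same as 18 percentage points.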

Performance optimizations

- Major performance improvements in concurrent model execution

The functionality that devAIce provides through different devAIce modules is internally powered by a number of independent machine-learning models. Even when users enable only a single devAIce module, two or more models are potentially running transparently under the hood. For example, the categorical and dimensional output of the Emotion module is implemented by two separate models running in parallel on the same input.

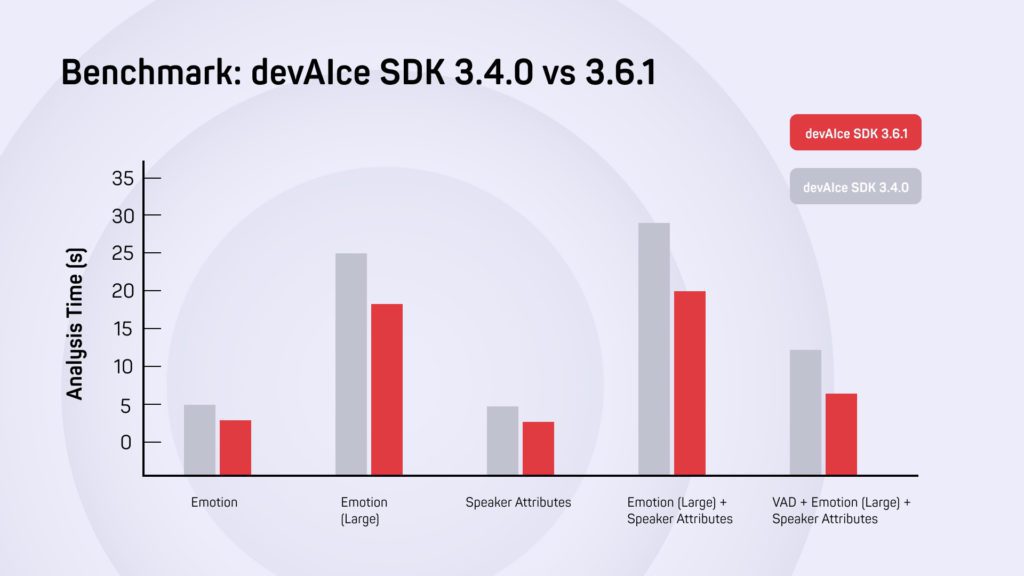

devAIce SDK 3.6.1 comes with significant optimizations in how models are concurrently executed, leading to noticeable speed-ups for many production cases. This reduction in processing overhead is particularly effective when two or more devAIce modules are enabled. Fig. 1 shows the measured difference in analysis time of a sample audio file for different module configurations, comparing devAIce SDK 3.4.0 and devAIce SDK 3.6.1. In this benchmark, the latest version of devAIce SDK is almost 50% faster than previous releases when running the common combination of VAD, Emotion (Large) and Speaker Attributes modules.

- Latency reduction for models run on VAD segments

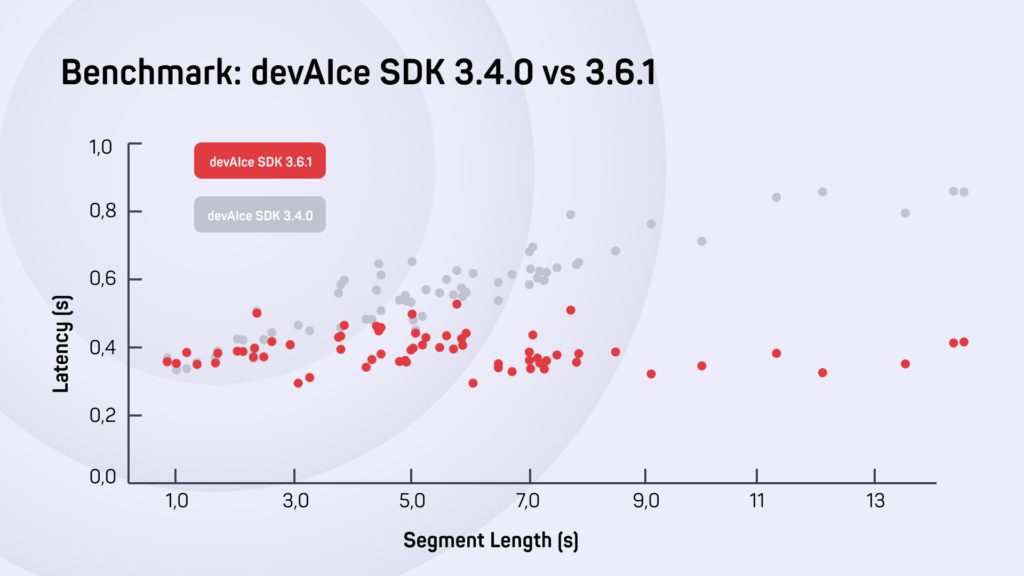

Since the beginning, devAIce has offered Voice Activity Detection (VAD) as an optional pre-processing step, so that all subsequent analysis models are executed only on detected speech segments. In SDK version 3.4.0 and earlier, model inference would only begin once the end of a speech segment had been detected. In real-time scenarios, this could mean higher result latency, since the time during which a speaker was still speaking could not be used for any processing work. Starting with version 3.5.0, devAIce SDK incrementally processes ongoing speech segments as soon as possible, leaving less work to be performed after the segment end is detected and thus reducing the time until model results can be returned.

Fig. 2 compares previous and recent releases of devAIce in terms of result latency depending on speech segment length. The plot shows that in pre-3.5.0 versions of devAIce, the time necessary to process a speech segment grew linearly with the length of the segment. Starting with version 3.5.0, the processing time stays roughly constant, independent of segment length.
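The difference between the two behaviors can be illustrated with a deliberately simplified toy model. It is not a description of the devAIce implementation: the real-time factor, the chunk size and both function names are assumptions made purely for illustration. The model assumes that processing is faster than real time and that incremental processing works on fixed-size chunks as they arrive.

```python
def batch_latency(segment_s, rtf):
    """Latency when all processing starts only after the segment has ended.

    rtf is the assumed real-time factor: seconds of compute per second of audio.
    """
    return rtf * segment_s


def incremental_latency_bound(segment_s, rtf, chunk_s):
    """Upper bound on latency when chunks are processed while speech continues.

    With rtf < 1, every chunk is finished before the next one is complete,
    so at most one chunk's worth of work remains when the segment ends.
    """
    if not 0 < rtf < 1:
        raise ValueError("toy model assumes faster-than-real-time processing")
    return rtf * min(segment_s, chunk_s)


# Hypothetical values: 0.1 s of compute per second of audio, 1 s chunks.
for segment_s in (1.0, 5.0, 20.0):
    old = batch_latency(segment_s, rtf=0.1)
    new = incremental_latency_bound(segment_s, rtf=0.1, chunk_s=1.0)
    print(f"{segment_s:5.1f} s segment: batch {old:.2f} s, incremental <= {new:.2f} s")
```

In this model the batch latency grows linearly with segment length, while the incremental bound depends only on the chunk size, which mirrors the qualitative shape of the two curves.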

Advanced performance settings

devAIce SDK 3.6.1 introduces two new session settings that can be used to tweak and optimize hardware utilization and performance at runtime:

- processing_mode

This setting accepts one of two values: sequential (default) and parallel. The default provides the best performance on most hardware. However, on certain systems, parallel processing mode can lead to noticeable speed-ups. We recommend benchmarking both options and choosing whichever setting provides the best performance on your target hardware.

- num_model_threads

This setting controls the number of threads used for neural network inference. By default, the number of threads is chosen based on the host CPU to provide optimal throughput. In certain cases, however, it may be desirable to use fewer threads in order to avoid negatively affecting other real-time-critical applications (e.g. games) running on the system. For these cases, users can now override the thread count using this setting.
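A simple way to compare settings on your target hardware is a small timing harness like the sketch below. Only the setting names processing_mode and num_model_threads and the values sequential and parallel come from the SDK; representing settings as a dictionary, the `run_analysis` callable, the thread count of 2 and the `fake_run_analysis` stand-in are all hypothetical. Please refer to the SDK documentation for how session settings are actually passed.

```python
import time


def time_once(run_analysis, settings):
    """Wall-clock time of a single analysis run with the given settings."""
    start = time.perf_counter()
    run_analysis(settings)
    return time.perf_counter() - start


def benchmark_settings(run_analysis, candidates, repeats=3):
    """Return (fastest_settings, [(settings, best_time_s), ...]).

    The best of several repeats is used to reduce noise from other processes.
    """
    timings = []
    for settings in candidates:
        best = min(time_once(run_analysis, settings) for _ in range(repeats))
        timings.append((settings, best))
    fastest = min(timings, key=lambda item: item[1])[0]
    return fastest, timings


# Candidate configurations to compare (the dictionary layout is an assumption).
CANDIDATES = [
    {"processing_mode": "sequential"},
    {"processing_mode": "parallel"},
    {"processing_mode": "parallel", "num_model_threads": 2},
]


def fake_run_analysis(settings):
    """Synthetic stand-in for a real analysis call; its timings mean nothing."""
    time.sleep(0.005 if settings["processing_mode"] == "sequential" else 0.05)


if __name__ == "__main__":
    fastest, timings = benchmark_settings(fake_run_analysis, CANDIDATES)
    for settings, seconds in timings:
        print(f"{settings}: {seconds * 1000:.1f} ms")
    print("fastest:", fastest)
```

To use it for real, replace `fake_run_analysis` with a function that analyzes a representative audio file using the given settings, and run the benchmark on the hardware you intend to deploy on.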

Other improvements

Apart from the major changes described above, the recent releases of devAIce bring a number of other smaller improvements and fixes for the API, documentation and the CLI tool. As with every release, you can find a complete changelog of what is new in the SDK documentation of the new releases.

Q&A

Do I have to pay for these updates?

Customers with subscription plans or licenses eligible for free updates do not have to pay for this update.

Do I have to update?

No, you may continue to use whatever version you are currently on. devAIce SDK users need to explicitly opt in to receive the new version. Nevertheless, we recommend updating to the latest version when possible in order to benefit from the latest model additions, improvements and fixes.

Where can I get more information?

If you are interested in updating to the new version of devAIce or have any questions on these updates or devAIce in general, please contact audEERING’s Customer Experience Manager Sylvia Szylar (sszylar@audeering.com).