Human blood is the basis for a wide range of diagnostic medical applications. But what if our voice could also reveal a great deal about a person’s current state of health? Is the voice the new blood? To better recognize the acoustic characteristics of disease patterns that show up in the human voice, we must first understand how the human voice is produced.

The vocal apparatus

When we produce a sound, various structures in the abdomen, chest, throat and head must work together: the diaphragm, lungs, trachea and thorax serve as the air supply (the “wind chest”); the vocal folds, larynx and glottis serve as the vibrator and tone generator; and the pharynx, oral cavity and nasal cavity serve as the resonator. In practice, our entire body influences the sound of the voice.

Distinguishing sick from healthy persons based on their voice

This entire process is under cognitive control, in interaction with other areas of the nervous system. When cognitive or physical strain, or a disease, affects this complex process, it can leave characteristic features in speech that can be used to distinguish a sick person from a healthy one.

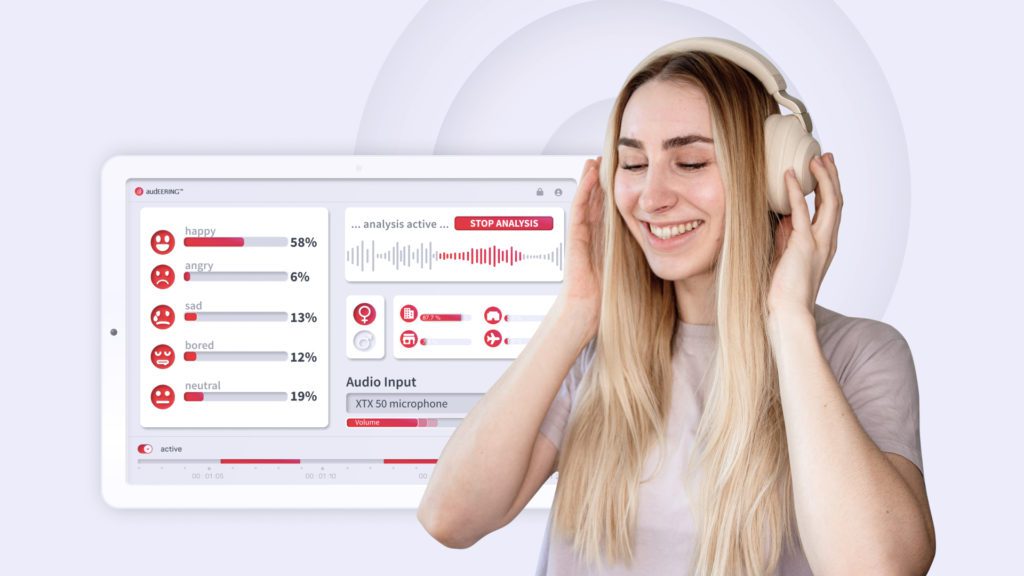

These features concern, for example, intonation, intensity and tempo. If AI systems are trained on suitable sound and speech data, usually consisting of pairs of an audio recording and an associated annotation such as a diagnosis, they can learn to recognize the corresponding target.
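To make intonation and intensity more concrete, here is a minimal sketch in pure Python of how such features might be computed frame by frame from raw audio. It assumes mono samples as floats in [-1, 1] and uses two simple, standard techniques: RMS level in dB for intensity and autocorrelation pitch estimation for intonation. Tempo is omitted because it needs additional steps such as syllable detection. The function names, the 40 ms frames and the 75 to 400 Hz pitch search range are illustrative assumptions, not the method of any particular system.

```python
import math

# Assumption: mono audio as a list of floats in [-1.0, 1.0] at sample rate `sr`.


def frame_signal(samples, frame_len, hop):
    """Split samples into overlapping frames of length frame_len."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


def rms_db(frame):
    """Intensity: RMS level of one frame in dB relative to full scale (1.0)."""
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    return 20.0 * math.log10(max(rms, 1e-12))  # floor avoids log10(0) on silence


def pitch_autocorr(frame, sr, fmin=75.0, fmax=400.0):
    """Intonation: estimate F0 (Hz) of one frame via raw autocorrelation.

    Only lags between sr/fmax and sr/fmin are searched; returns 0.0 if no
    positive correlation is found (treated as unvoiced).
    """
    min_lag = int(sr / fmax)
    max_lag = min(int(sr / fmin), len(frame) - 1)
    best_lag, best_val = 0, 0.0
    for lag in range(min_lag, max_lag + 1):
        val = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if val > best_val:
            best_lag, best_val = lag, val
    return sr / best_lag if best_lag else 0.0


def extract_features(samples, sr, frame_ms=40, hop_ms=20):
    """Return per-frame pitch (Hz) and level (dB) lists for one recording."""
    frame_len = int(sr * frame_ms / 1000)
    hop = int(sr * hop_ms / 1000)
    frames = frame_signal(samples, frame_len, hop)
    pitches = [pitch_autocorr(f, sr) for f in frames]
    levels = [rms_db(f) for f in frames]
    return pitches, levels


if __name__ == "__main__":
    sr = 16000
    # Synthetic 200 Hz tone standing in for a sustained vowel.
    tone = [0.5 * math.sin(2 * math.pi * 200 * n / sr) for n in range(sr // 10)]
    pitches, levels = extract_features(tone, sr)
    print([round(p, 1) for p in pitches], [round(v, 1) for v in levels])
```

In a training set, each recording’s per-frame values would typically be summarized (for example as mean and spread) and paired with its annotation, such as a diagnosis label, to form one training example.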

Examples of physical and psychological aspects

Two essential aspects can be observed: physical and psychological. An AI system can “hear” both by analyzing the audio signal and learning from large amounts of data. The sensor needed to record voice data is a microphone, which is built into almost every computer-based device today, such as smartphones and tablets.

Parkinson’s syndrome is an example that mainly concerns the physical aspects, namely motor disorders of speech production. In this neurodegenerative disorder, the muscle groups involved in speech production are impaired, among other things. As a result, affected persons have difficulty pronouncing words, and their speech shows monotonous intensity and intonation, reduced emphasis and a rough voice quality.
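The monotony described above can be quantified in several ways. One simple measure is how much the fundamental frequency (F0) and the intensity vary over an utterance. The sketch below assumes per-frame F0 values in Hz (0 for unvoiced frames) and per-frame levels in dB, as a pitch tracker might produce, and computes their standard deviations; F0 is converted to semitones so that the measure does not depend on a speaker’s overall pitch. The function names are hypothetical, and the sketch implies no clinical thresholds: lower values only indicate a flatter voice.

```python
import math
import statistics


def semitone_sd(f0_hz):
    """Standard deviation of F0 in semitones, using voiced frames (F0 > 0) only."""
    voiced = [f for f in f0_hz if f > 0]
    if len(voiced) < 2:
        return 0.0
    ref = statistics.median(voiced)  # speaker-specific reference pitch
    semis = [12.0 * math.log2(f / ref) for f in voiced]
    return statistics.pstdev(semis)


def intensity_sd(levels_db):
    """Standard deviation of per-frame intensity in dB."""
    return statistics.pstdev(levels_db) if len(levels_db) >= 2 else 0.0


def monotony_profile(f0_hz, levels_db):
    """Hypothetical summary: low values suggest monotonous pitch and loudness."""
    return {
        "f0_sd_semitones": semitone_sd(f0_hz),
        "intensity_sd_db": intensity_sd(levels_db),
    }


if __name__ == "__main__":
    lively = monotony_profile([180, 220, 150, 250, 200], [-20, -12, -25, -10, -18])
    flat = monotony_profile([200, 201, 199, 200, 200], [-18, -18, -17, -18, -18])
    print(lively)
    print(flat)
```

Measuring F0 spread in semitones rather than Hz reflects the roughly logarithmic way pitch is perceived, so the same melodic variation yields similar values for low and high voices.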

On the mental and psychological side, emotion recognition is the prime example of how sound and speech production can be affected. Emotions play an essential role in clinical pictures such as burnout, depression, autism and Alzheimer’s disease, and the voice is increasingly regarded as a relevant indicator for recognizing them.

Data collection as a basis

A key factor in AI-based speech analysis is the availability and quality of suitable voice data. To teach an algorithm the relevant characteristics, large amounts of sound and voice data are required. These must include recordings of persons with the target disease as well as recordings of persons without it, with high variability: healthy speakers and speakers with various other clinical pictures and conditions.

COVID-19 as a current example

A current example of intelligent voice and speech analysis is the detection of COVID-19 from speech. Among other things, the virus attacks the respiratory system, which manifests in symptoms such as coughing, a strained voice, frequent pain in the throat and pharynx, shortness of breath, and lung problems such as rales.

Initial studies report good detection rates for COVID-19. However, combining the analysis of speech with the analysis of sound events such as coughing is best suited to making even more reliable statements about COVID-19 symptoms and to using the approach as a diagnostic tool. This could make a significant contribution to containing the pandemic, especially by breaking chains of infection and detecting the disease at an early stage.
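The text does not specify how speech and sound-event analyses would be combined. One simple, widely used option is late fusion: each modality has its own classifier, and their output probabilities are merged afterwards. The sketch below assumes two such hypothetical models (one for speech, one for sound events such as coughs) that each return a probability of COVID-19, and combines them with a weighted average. The class, the function names, the weights and the 0.5 threshold are illustrative assumptions, not validated values.

```python
from dataclasses import dataclass


@dataclass
class ModalityScore:
    """Output of one hypothetical per-modality classifier."""
    name: str            # e.g. "speech" or "cough"
    probability: float   # estimated probability of COVID-19, in [0, 1]
    weight: float = 1.0  # relative trust in this modality (assumed, not tuned)


def late_fusion(scores):
    """Weighted average of per-modality probabilities."""
    if not scores:
        raise ValueError("need at least one modality score")
    for s in scores:
        if not 0.0 <= s.probability <= 1.0:
            raise ValueError(f"{s.name}: probability must be in [0, 1]")
        if s.weight < 0:
            raise ValueError(f"{s.name}: weight must be non-negative")
    total = sum(s.weight for s in scores)
    if total == 0:
        raise ValueError("weights must not all be zero")
    return sum(s.weight * s.probability for s in scores) / total


def flag_for_followup(scores, threshold=0.5):
    """Hypothetical screening rule: flag if the fused probability reaches threshold."""
    return late_fusion(scores) >= threshold


if __name__ == "__main__":
    scores = [ModalityScore("speech", 0.55), ModalityScore("cough", 0.80, weight=2.0)]
    print(round(late_fusion(scores), 3), flag_for_followup(scores))
```

Late fusion keeps the two analyses independent, so each model can be trained on its own data; the alternative is early fusion, where speech and sound-event features are concatenated before a single classifier.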
