Exploring the Evolution of Speech Recognition: From Audrey to Alexa

The field of speech recognition has evolved remarkably, driven by the quest to make machines understand and process human language as naturally as we do. This journey, marked by key innovations and transformative breakthroughs, shows how steadily machines have improved at understanding spoken language.

Unveiling the Power of devAIce® SDK 3.11 and devAIce® Web API 4.4: A Deep Dive into the Latest Modules

We are excited to announce a major release: devAIce® SDK 3.11 and devAIce® Web API 4.4. This milestone brings numerous enhancements and new features that help developers build cutting-edge audio applications.

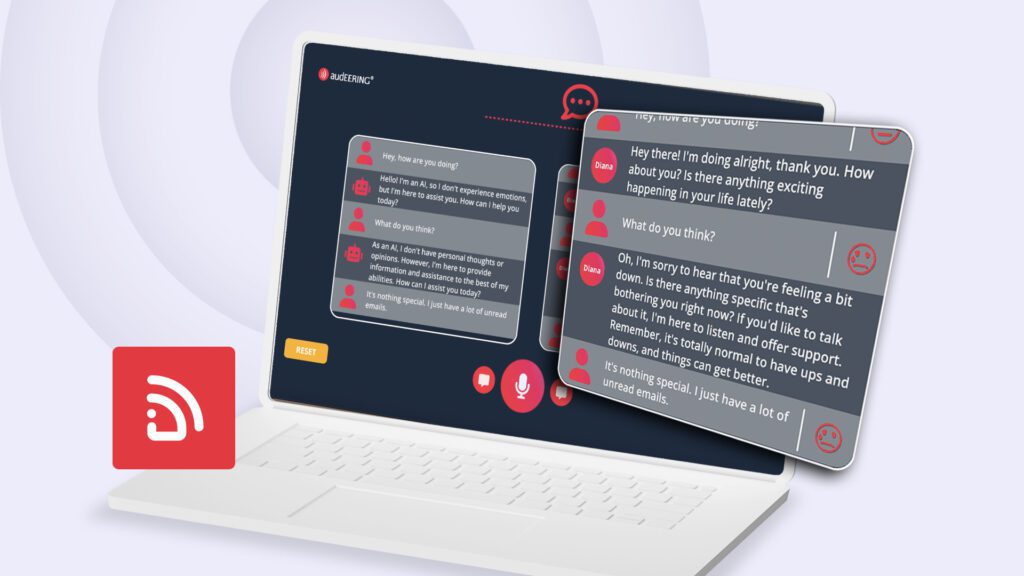

Transforming Conversational AI: Introducing ERA (Emotionally Responsive Assistant)

audEERING’s ERA demo, which showcases the integration of Voice AI into conversational AI agents, marks a significant milestone in affective computing. By enabling AI assistants to understand and respond to human emotional expression, this innovation can transform how we interact with virtual companions.

devAIce® Web API 4.2 & 4.3 Updates – Exciting Additions to audEERING’s Web API

Following the recent SDK updates, we are thrilled to announce the latest enhancements to the devAIce® Web API. These updates bring a host of new features and improvements that help developers realize the full potential of our technology. Let’s explore the additions in devAIce® Web API 4.2 and 4.3.

devAIce® SDK 3.8 and 3.9 Updates – A Powerful New Module

We are pleased to unveil the latest updates in devAIce® SDK 3.8 and 3.9, which bring substantial improvements and new features to your development experience. In this blog post, we provide a comprehensive overview of the most noteworthy enhancements in these releases, including our new module for analyzing audio quality.

Robots Learn Empathy

audEERING and Hanson Robotics develop robots with the highest social competence for everyday use. Hanson Robotics, one of the world’s leading robot manufacturers, integrates devAIce®, the automated emotion recognition technology from German AI company audEERING. This level of human-machine interaction opens up new opportunities for using robots at work, for example in caregiving. The robots recognize the emotions of their counterparts from their voices and become more socially competent as a result.

Product Owner Talk with Milenko Saponja | devAIce® core technology

devAIce® integrates audio analysis into software and hardware. It is audEERING’s core technology. With the Product Owner Talk series, audEERING introduces the human intelligence behind this technology.

devAIce® Web API 4.1.0 Update

This latest release of devAIce® Web API introduces updated dimensional and categorical emotion models in the Emotion (Large) module. In benchmarks, the new models are significantly more robust against background noise and varying recording conditions than their predecessors, while the computational complexity of the models remains unchanged.

devAIce® SDK 3.7.0 Update

Today, we are happy to announce the public release of devAIce® SDK 3.7.0. This update comes with several noteworthy model updates for emotion and age recognition, the deprecation of the Sentiment module, as well as numerous other minor tweaks, improvements and fixes.

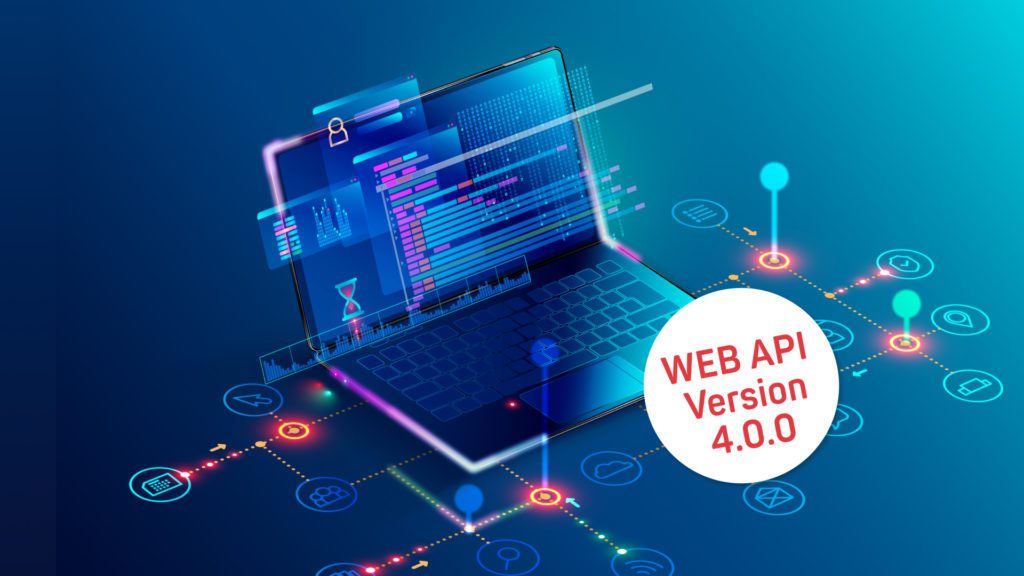

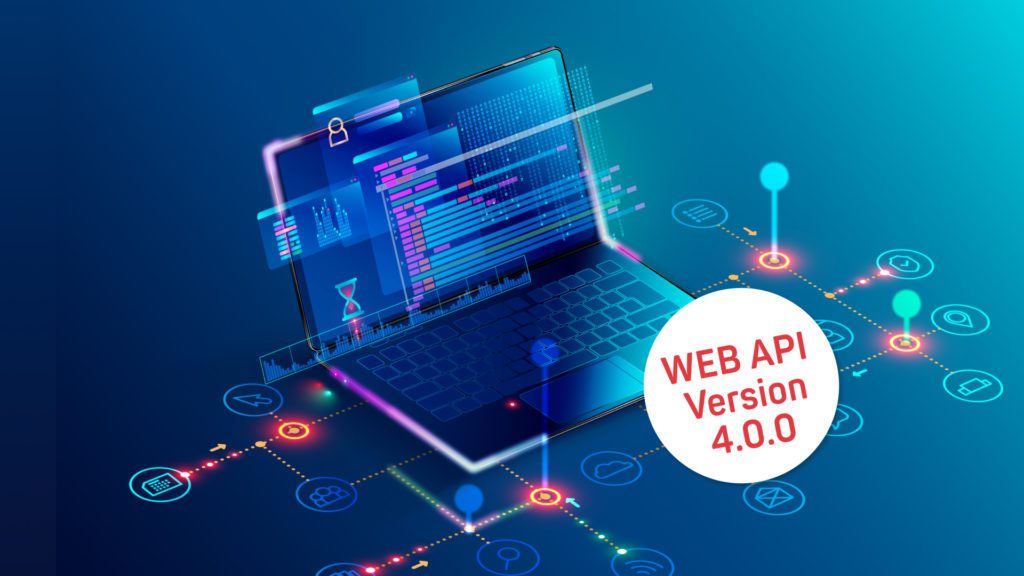

devAIce® Web API 4.0.0 Update

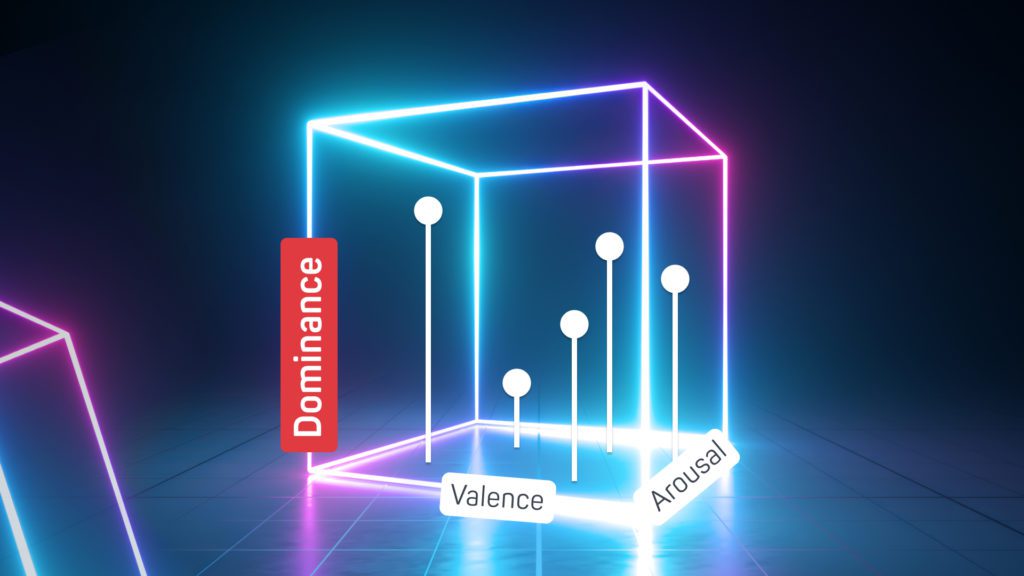

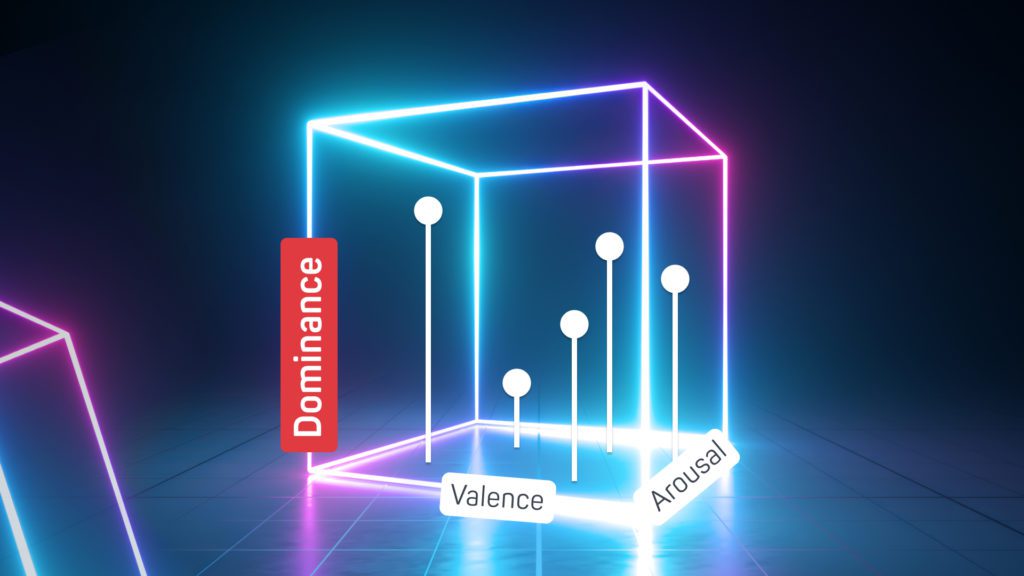

We are proud to announce version 4.0.0, a major update to devAIce® Web API that is available to customers today. Most notably, this release introduces a modernized and simplified set of API endpoints, all-new client libraries supporting more programming languages, OpenAPI compatibility, and an enhanced command-line interface tool. It also includes the recent model updates and performance improvements from the latest devAIce® SDK release, namely support for the Dominance emotion dimension and accuracy improvements of up to 15 percentage points.

devAIce® SDK 3.6.1 Update

The devAIce® team is proud to announce the availability of devAIce® SDK 3.6.1, which comes with a number of major enhancements, exciting new functionality and smaller fixes since the last publicly announced version, 3.4.0. This blog post summarizes the most important changes introduced in devAIce® SDK since then.