We are proud to announce a new class of next-gen emotion models coming to devAIce™ with the 3.4.0 release of the devAIce™ SDK/Web API.

devAIce™ – audio analysis for hardware and software

devAIce™ is audEERING’s core technology on which every further idea grows. It is designed to recognize emotions, acoustic scenes, and many other features from audio, both in real time and for any product. The AI models perform solidly even with limited CPU power, and the technology can track over 6,000 voice parameters in real time. Our researchers use cutting-edge AI methods such as deep neural networks and unsupervised learning. We use only high-quality data to optimize our models.

What’s new in devAIce™

- New family of emotion models

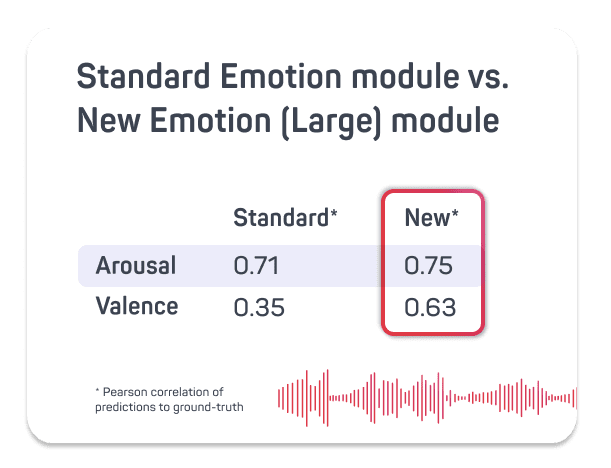

A new class of next-gen emotion models is provided in devAIce™ as part of the newly introduced Emotion (Large) module. By leveraging the latest advances in deep-learning-based audio classification, these models can significantly outperform our standard Emotion module.

Compared with our standard emotion models on the MSP-Podcast test set, the new models show a dramatic increase in valence prediction accuracy and a noticeable boost in arousal estimation.

- Emotion fusion from speech and text

Standalone models for emotion recognition from audio and for sentiment analysis from English text are already available in devAIce™. With this release, we are introducing the new Multi-Modal Emotion module. It offers a single, dedicated model that accepts both audio and text as input and fuses them into one combined emotion prediction.

- Speaker Verification

The latest version of devAIce™ introduces support for enrollment-based speaker verification via the Speaker Verification module. This feature allows you to detect whether a speaker matches one or more previously enrolled speakers. One potential use case is user-targeted data collection, where only the speech of one specific speaker should be processed and all other voices ignored.
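To make the idea of enrollment-based verification more concrete, here is a minimal, generic sketch in Python. It is not the devAIce™ API: the function names, the use of fixed-length speaker embeddings, the cosine-similarity comparison, and the threshold value are all assumptions chosen for illustration. The sketch compares an incoming voice embedding against a set of enrolled reference embeddings and accepts the best match only if it is similar enough.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def verify_speaker(embedding, enrolled, threshold=0.7):
    """Return (name, score) for the best-matching enrolled speaker.

    `enrolled` maps a speaker name to a reference embedding created at
    enrollment time. If no enrolled speaker reaches `threshold`
    (a hypothetical value), the name is None and the voice is rejected.
    """
    best_name, best_score = None, -1.0
    for name, reference in enrolled.items():
        score = cosine_similarity(embedding, reference)
        if score > best_score:
            best_name, best_score = name, score
    if best_score >= threshold:
        return best_name, best_score
    return None, best_score


# Toy 3-dimensional embeddings; real speaker embeddings are much larger.
enrolled = {"alice": [0.9, 0.1, 0.0], "bob": [0.0, 0.2, 0.95]}

known_match, known_score = verify_speaker([0.85, 0.15, 0.05], enrolled)
unknown_match, unknown_score = verify_speaker([0.1, 0.9, 0.1], enrolled)
```

In the user-targeted data collection scenario described above, audio segments for which the match is `None` would simply be skipped, so that only the enrolled speaker's speech is processed further.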

- Intonation

We are extending the Prosody module with a new metric for analyzing and rating the intonation of human speech. You can use this output to assess how monotonous or lively a person’s speech is.

audEERING’s mission & vision

audEERING continues to support and develop the standard version of these models for low-resource environments. More broadly, we are committed to realizing the full potential of the voice as a natural resource to create value for people and their everyday lives.

Where can I get more information? Do you have questions about the updates to devAIce™? Would you like to get your hands on the latest version?

For further information, contact our Customer Experience Manager, Sylvia Szylar: sszylar@audeering.com