Within ERIK, a German nationally funded research project about robot

interaction with autistic children, audEERING is developing software to

analyse and express emotional speech

Autistic children and adults sometimes have difficulties to express and interpret their own and others emotional arousal. In

the worst case, this can lead to social isolation, in milder cases to many awkward situations based on misunderstandings.

For years, Psychologists from the Humboldt University of Berlin [1] are working with autistic children and interactive technology and have developed games that help these children to train their emotion understanding capabilities [2].

Together with Fraunhofer IIS, the Astrum IT GmbH the University of Erlangen-Nuremberg (FAU) and the Humboldt University, audEERING joined forces in a BMBF funded project [3] that aims for developing a robot platform that can be used in therapy sessions to let children playfully explore and train the world of emotional expression.

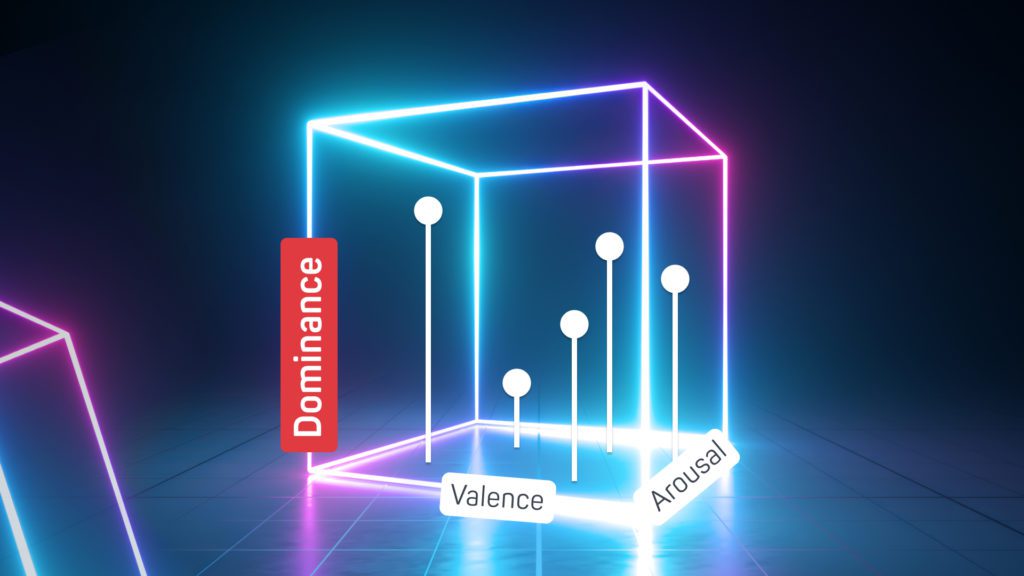

To help children estimating the effect of their vocal expression, audEERING will record a database of non-diagnosed children that can serve as a reference for socially acceptable expressions of arousal and valence. This database will be annotated by audEERING’s iHEARu-PLAY platform and used to train our deep neural net classifiers to detect arousal and valence in speech. Like this, the children can get feedback by the robot ”You might feel happiness now but for others this expression might appear as anger´´. The robot does not have to rely on the speech analysis alone as the audio predictions will be interpreted together with the output of mimic and heart rate detection modules developed by Fraunhofer IIS.

These will be combined initially in a late fusion approach. Late fusion means that the results of each modality (mimics, speech and heart-rate) will be combined at the end to achieve one final result (the combination can still be done by a machine learning approach). This has the advantage that for each modality the classifiers can be trained on an own database. We will also investigate early fusion approaches where the input signals or extracted parameters for each modality will be combined in one single input for a classifier as soon as common databases are available.

Furthermore, audEERING is developing a software component that alters the speech output of the robot to sound more emotionally appealing and enhance naturalness by adding variation to the prosody, i.e. the melody and rhythm of the speech. We hope that the interaction with the robot will thus be more interesting and enhance the believability of the robot as an emotion-competent intelligent entity, although the interaction will firstly be script-based and not rely on AI algorithms.

The project started in Sept. 2018 and will demonstrate first prototypes at the end of 2019.

[1] https://www.psychologie.hu-berlin.de/de/prof/soccog

[2] http://www.zirkus-empathico.de/

[3] https://www.technik-zum-menschen-bringen.de/projekte/eri