Trees were starting to blossom in the spring of 2016 when the Oculus Rift was released as a consumer product. You could put it on and simply teleport into the virtual realm. It has been enhanced with more features ever since, helping users feel even more immersed in the environment.

You can use the Touch controllers to interact with the environment, haptic feedback is advancing every month, and new gadgets are helping us engage more of our senses.

Interaction is key in virtual environments

One of the fundamental factors in achieving higher presence and immersion in virtual environments is interaction. The more responsive the environment, the stronger the feeling of presence in it.

To that end, scientists have tried to involve more senses since the advent of virtual reality. However, the majority of these efforts have focused on the output of the system, that is, the input to the user: higher-quality visuals, 3D audio, and haptic vests and gloves.

Affective Computing as input to virtual reality

In this article, we want to point out a less explored domain: Affective Computing as Input to Virtual Reality.

Alright, let’s go with the easy Grandma-version explanation for non-experts. In other words, we will do the following:

Grandson! Examples, please: Ok, ok, sorry, Grandma. So, you put these glasses on (he carefully mounts the Oculus Rift on Grandma). Now you can walk in the forest.

She looks around and starts exploring the Avatar-like environment. Some creatures start to stalk her carefully.

She notices them and looks at them. She gets excited by their cute glowy skin.

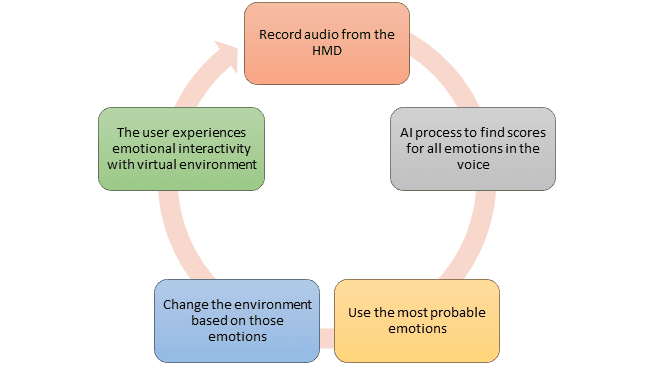

Her excitement is detected through her voice: an AI process indicates that the user is experiencing a higher level of excitement. This information is then passed into the game.

As the cute little glowy creatures find out that she is excited to see them, and neither frightened nor threatening, they start to approach. As they slowly crawl out of the bushes, Grandma gets more thrilled to be close to them. This excitement reinforces the feedback loop, and finally she gets to touch them and starts petting them the way she pets her own cat, which is watching through the window at the moment.

Emotions are our deepest level of communication

She has never felt this immersed in a virtual environment. Why? Not only was she able to see them, she was also connecting with them through emotions, the deepest level of connection that we establish.

Minutes pass by and I’m still watching my Grandma sitting in the middle of the dining room, petting the air around her and saying cute words.

The technical side: what is going on in the background

This level of connection and interaction is powerful enough to thrill not only a Grandma but also any VR geek out there. Speaking of geeky, let’s get a bit technical and see what was happening here:

The virtual environment is a game environment built using Unity or any other game engine. The output is simply ported to the Oculus Rift so that the user gets a first-person view. The in-ear noise-cancelling headphones (let’s say Jabra Elite) help her hear the ambience of the forest and every little sound that those cute creatures make. They can also record Grandma’s voice and transmit it to the PC over Bluetooth. The voice is processed using a Unity plugin for emotion detection. The output of this plugin is a JSON object containing scores and their probabilities for each emotion the plugin recognizes.
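To make the last step concrete, here is a minimal sketch of how a game script might read such a JSON object. The field names (`emotions`, `excitement`, and so on) and the 0.5 confidence threshold are assumptions made up for illustration, not the plugin’s actual schema.

```python
import json

# Hypothetical plugin output: the field names and values are assumptions.
SAMPLE_OUTPUT = (
    '{"emotions": {"excitement": 0.72, "fear": 0.08, '
    '"anger": 0.05, "neutral": 0.15}}'
)


def dominant_emotion(raw_json: str, min_probability: float = 0.5) -> str:
    """Return the most probable emotion, or "uncertain" below the threshold."""
    probabilities = json.loads(raw_json)["emotions"]
    # Pick the (label, probability) pair with the highest probability.
    label, probability = max(probabilities.items(), key=lambda item: item[1])
    return label if probability >= min_probability else "uncertain"


print(dominant_emotion(SAMPLE_OUTPUT))
```

Falling back to an "uncertain" label when no emotion is confident enough keeps the game from reacting to noisy or ambiguous readings.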

And finally, an if statement checks the detected emotion and changes the environment accordingly.

This is not a dream, nor a science fiction movie. It’s simply the current state of available technologies. It’s entirely possible nowadays using audio engineering, machine learning, and the tools we have provided to the community.
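As a rough sketch of that if statement, the creatures in Grandma’s forest could react like this. The `Creature` class, the emotion labels, and the distances are all hypothetical and only illustrate the idea; a real game would do this inside the engine’s own scripts.

```python
from dataclasses import dataclass


@dataclass
class Creature:
    distance_m: float = 10.0  # assumed starting distance from the player

    def react(self, emotion: str, step_m: float = 1.0) -> None:
        # The detected emotion drives the creature's behavior.
        if emotion == "excitement":
            # Friendly player: come closer, but never past the player.
            self.distance_m = max(0.0, self.distance_m - step_m)
        elif emotion in ("fear", "anger"):
            # Frightened or threatening player: back off.
            self.distance_m += step_m
        # Any other label (for example "neutral"): stay where it is.

    @property
    def can_be_petted(self) -> bool:
        return self.distance_m <= 1.0


creature = Creature()
for detected in ["neutral", "excitement", "excitement", "fear"]:
    creature.react(detected)
print(creature.distance_m, creature.can_be_petted)
```

Because each new reading nudges the creature a little closer or farther away, the player’s growing excitement and the creature’s approach reinforce each other, which is the feedback loop from the story.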

The voice carries much more than just what we say

We usually forget how much information our voice carries. Every sentence we say and every sound we produce is the final output of what we are experiencing inside. We can detect emotions and use them for a lot of good purposes.

Connecting through emotion is almost as old as human evolution itself. It is wired into our brains and it’s not going to change. Capturing voice is non-invasive and natural, and the accuracy of emotion recognition is good enough for use in consumer products.

Last but not least, if you are a dreamer like us and you have dreamt of being immersed in an environment that responds to your emotions, get in touch and let us help you realize this dream.