Your voice has the power to enable natural human-machine interaction.

devAIce®

Empathic machines

Powered by Voice AI

Make voices as important in human-machine interaction as they are in your daily interactions. Enabling machines to behave interactively, naturally, and individually is possible with Voice AI.

This results in personalized settings, deep insights into users’ needs, and empathy as a KPI of digitalization. With Voice AI, the greatest possible added value is extracted from human-machine interaction.

devAIce® is our responsible Audio & Voice AI solution that can be used in numerous use cases:

Software or hardware

Audio analysis for any product

devAIce® analyzes vocal expression and acoustic scenes and detects many other features from audio. Our AI models perform reliably even with limited CPU power: devAIce® is optimized for low resource consumption, and many of its models can run in real time on embedded, low-power ARM devices such as Raspberry Pi and other SoCs.

The VAD module detects the presence of voice in an audio stream. The detection is highly robust to noise and independent of the volume level of the voice. This means that even faint voices can be detected in the presence of louder background noise.

Detecting voice in large amounts of audio material leads to a resource-saving and efficient analysis process. If VAD runs before the voice analysis itself, large amounts of non-voice data can be filtered and excluded from the analysis.
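The saving from running VAD first can be estimated with simple arithmetic. The sketch below is only an illustration: the segment list is hypothetical VAD output written as `(start, end)` pairs in seconds, and the function names are assumptions, not part of devAIce®.

```python
from typing import List, Tuple

Segment = Tuple[float, float]  # (start_s, end_s) of one detected voice segment


def voiced_seconds(segments: List[Segment]) -> float:
    """Total duration of all voice segments, in seconds."""
    return sum(end - start for start, end in segments)


def skipped_share(total_seconds: float, segments: List[Segment]) -> float:
    """Fraction of the recording that later analysis steps never have to process."""
    return 1.0 - voiced_seconds(segments) / total_seconds


# Hypothetical VAD output for a 60-second recording.
segments = [(2.0, 9.5), (20.0, 31.0), (48.5, 52.0)]
print(f"voiced: {voiced_seconds(segments):.1f} s")
print(f"skipped: {skipped_share(60.0, segments):.0%}")
```

In this toy case only the voiced segments would be passed on to voice analysis, and the rest of the recording is excluded.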

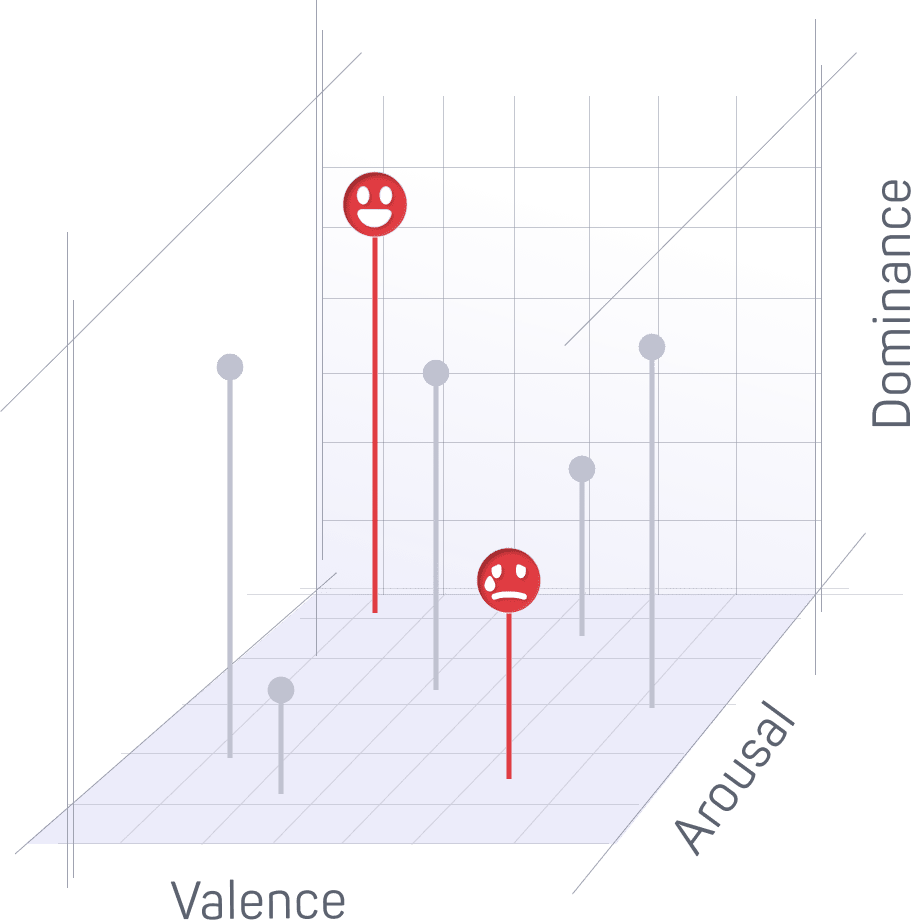

The Expression module analyzes the expression of emotions in the voice. The module was developed to analyze expression in all languages. Currently, the module combines two independent expression models with different output representations:

- A dimensional arousal-valence-dominance expression model

- A four-class categorical expression model: happy, angry, sad, and neutral

In devAIce® we offer two Expression modules: Expression & Expression Large.

If you are working with limited computational resources or running your application on devices with less memory, the Expression module is the better fit. The Expression Large module offers higher prediction accuracy at a higher resource cost.

The Multi-Modal Expression module combines acoustics- and linguistics-based expression analysis in a single module.

It achieves higher accuracy than models that are limited to only one of the modalities (e.g. the model provided by the Expression module). Acoustic models tend to perform better at estimating the arousal dimension of expression, while linguistic models excel at predicting valence (positivity/negativity). The Multi-Modal Expression module fuses information from both modalities to improve the prediction accuracy.
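One common way to combine two modalities is late fusion: each model predicts on its own, and the predictions are averaged with per-dimension weights. The sketch below illustrates that general idea only. It is not a description of how the Multi-Modal Expression module fuses its inputs, and the weights, value ranges, and names are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Dimensions:
    arousal: float
    valence: float
    dominance: float


def fuse(acoustic: Dimensions, linguistic: Dimensions,
         acoustic_share: Dimensions) -> Dimensions:
    """Per-dimension weighted average; each share is the acoustic weight in [0, 1]."""
    def mix(acou: float, ling: float, share: float) -> float:
        return share * acou + (1.0 - share) * ling

    return Dimensions(
        arousal=mix(acoustic.arousal, linguistic.arousal, acoustic_share.arousal),
        valence=mix(acoustic.valence, linguistic.valence, acoustic_share.valence),
        dominance=mix(acoustic.dominance, linguistic.dominance, acoustic_share.dominance),
    )


# Assumed weights: trust acoustics more for arousal, linguistics more for valence.
shares = Dimensions(arousal=0.75, valence=0.25, dominance=0.5)
acoustic = Dimensions(arousal=0.8, valence=0.2, dominance=0.6)
linguistic = Dimensions(arousal=0.4, valence=0.6, dominance=0.6)

fused = fuse(acoustic, linguistic, shares)
print(fused)
```

The fused arousal stays close to the acoustic estimate, while the fused valence stays close to the linguistic one.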

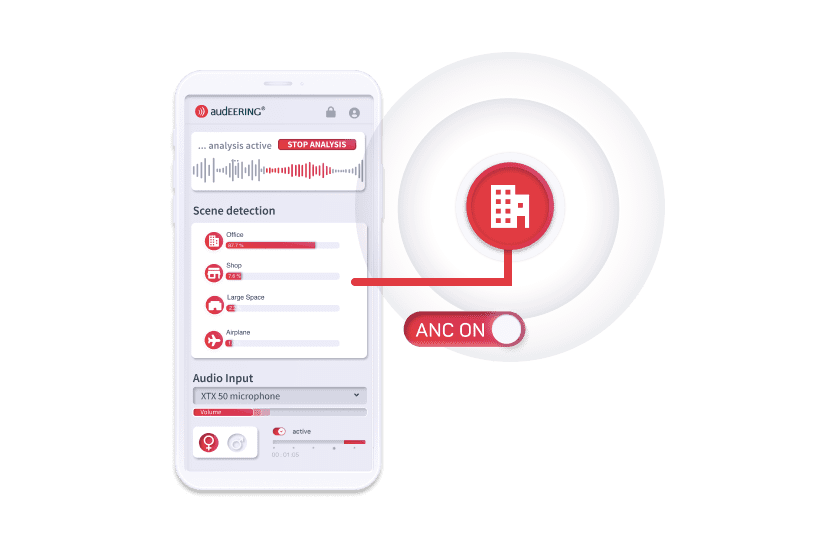

The Acoustic Scene Module distinguishes between 3 classes:

- Indoor

- Outdoor

- Transport

Further subclasses are recognized in each acoustic scene class. This model is currently under development – the specific subclasses will be named in the next update.

The AED module runs acoustic event detection for multiple acoustic event categories on an audio stream.

Currently, speech and music are supported acoustic event categories. The model allows events of different categories to overlap temporally.
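Overlapping events can be represented as independent time intervals per category. The following sketch assumes a hypothetical result format (category, start, end in seconds) and shows how a consumer of such results might find the stretches where speech and music occur at the same time.

```python
from typing import List, NamedTuple, Tuple


class Event(NamedTuple):
    category: str
    start: float  # seconds
    end: float    # seconds


def overlaps(events: List[Event]) -> List[Tuple[Event, Event, float]]:
    """Return each pair of different-category events that overlap, with the overlap length."""
    found = []
    for i, first in enumerate(events):
        for second in events[i + 1:]:
            if first.category == second.category:
                continue
            shared = min(first.end, second.end) - max(first.start, second.start)
            if shared > 0:
                found.append((first, second, shared))
    return found


# Hypothetical detection result: background music with two stretches of speech.
events = [
    Event("music", 0.0, 12.0),
    Event("speech", 3.0, 7.5),
    Event("speech", 11.0, 15.0),
]
pairs = overlaps(events)
for first, second, shared in pairs:
    print(first.category, second.category, shared)
```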

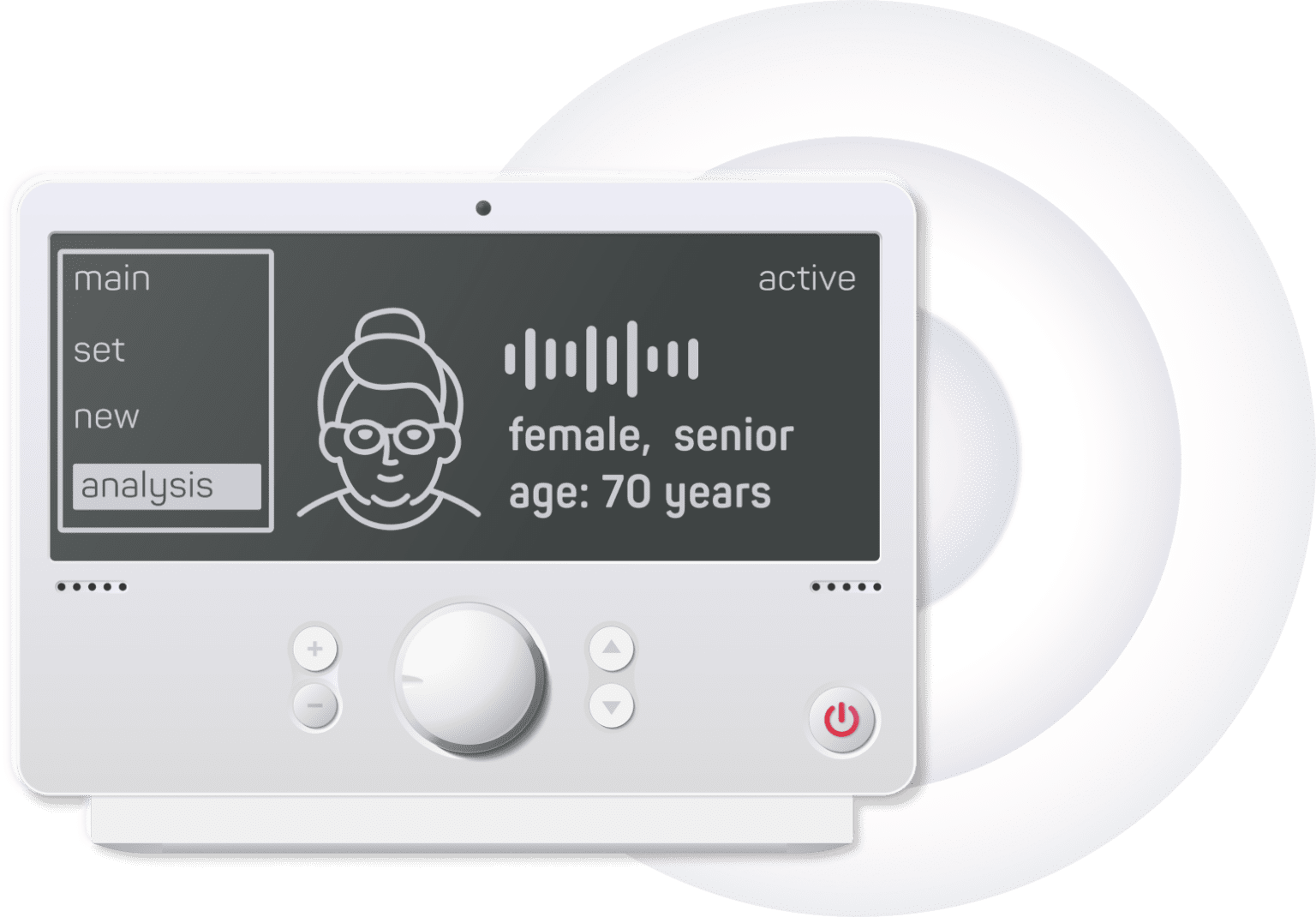

The Speaker Attributes module estimates the personal attributes of speakers from voice and speech. These attributes include:

- A perceived gender (sex), divided into the categories:

- female adult

- male adult

- child

- A perceived age, in years

devAIce® provides two gender submodules called Gender and Gender (Small).

The age-gender models are trained on self-reported gender.

Building on the foundation of the previous Speaker Verification module (Beta version), we have replaced the underlying model with a new one based on the latest deep-learning architectures. That resulted in a notable increase in accuracy, significantly reducing the Equal Error Rate (EER) down to just 4.3%, a state-of-the-art accomplishment.
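The Equal Error Rate is the operating point at which the false acceptance rate (impostors accepted) equals the false rejection rate (genuine speakers rejected). The sketch below shows how an EER can be estimated from two lists of verification scores. The scores are toy values, and the threshold scan is a simple approximation rather than the evaluation procedure behind the figure quoted above.

```python
from typing import List, Tuple


def error_rates(genuine: List[float], impostor: List[float],
                threshold: float) -> Tuple[float, float]:
    """Return (FAR, FRR) when scores at or above the threshold are accepted."""
    far = sum(score >= threshold for score in impostor) / len(impostor)
    frr = sum(score < threshold for score in genuine) / len(genuine)
    return far, frr


def equal_error_rate(genuine: List[float], impostor: List[float]) -> float:
    """Scan every observed score as a threshold; return the rate where FAR and FRR are closest."""
    best_gap, best_eer = None, None
    for threshold in sorted(set(genuine) | set(impostor)):
        far, frr = error_rates(genuine, impostor, threshold)
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, best_eer = gap, (far + frr) / 2
    return best_eer


# Toy scores: higher means "more likely the same speaker".
genuine = [0.9, 0.8, 0.7, 0.4]
impostor = [0.6, 0.3, 0.2, 0.1]
eer = equal_error_rate(genuine, impostor)
print(f"EER: {eer:.0%}")
```

With these toy scores one genuine trial in four is rejected and one impostor trial in four is accepted at the balancing threshold.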

The new model makes the verification of a single speaker more robust and reliable. The module supports two modes:

- Enrollment mode: a speaker model for a reference speaker is created or updated based on one or more reference recordings.

- Verification mode: a previously created speaker model is used to estimate how likely the same speaker is present in a given recording.
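The two modes can be pictured with a generic embedding-based scheme: enrollment averages fixed-length voice embeddings into a speaker model, and verification scores a new embedding against that average. This is a sketch of the general technique under stated assumptions, not the devAIce® implementation. The two-dimensional vectors stand in for embeddings that a real system would obtain from a neural network, and the class and method names are hypothetical.

```python
import math
from typing import List, Optional

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


class SpeakerModel:
    """Running average of the reference embeddings for one speaker."""

    def __init__(self) -> None:
        self.total: Optional[Vector] = None
        self.count = 0

    def enroll(self, embedding: Vector) -> None:
        """Create the model on first use, update it on later calls."""
        if self.total is None:
            self.total = list(embedding)
        else:
            self.total = [t + e for t, e in zip(self.total, embedding)]
        self.count += 1

    def verify(self, embedding: Vector) -> float:
        """Similarity score; higher means the same speaker is more likely."""
        if self.total is None:
            raise ValueError("enroll at least one reference recording first")
        centroid = [t / self.count for t in self.total]
        return cosine(centroid, embedding)


model = SpeakerModel()
model.enroll([1.0, 0.0])   # first reference recording
model.enroll([0.8, 0.2])   # second reference recording updates the model
same = model.verify([1.0, 0.1])
other = model.verify([0.0, 1.0])
print(same > other)
```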

It’s important to note that, due to the new underlying model, there is an increase in the resource usage and computational cost of the Speaker Verification module.

The Prosody module computes the following prosodic features:

- F0 (in Hz)

- Loudness

- Speaking rate (in syllables per second)

- Intonation

Running the Prosody module in combination with VAD:

- If the VAD module is disabled, the full audio input is analyzed as a single utterance, and one prosody result is generated.

- When VAD is enabled, the audio input is first segmented by the VAD before the Prosody module is run on each detected voice segment. In this case, individual prosody results for each segment will be output.
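The two cases above differ only in how the input is split before analysis. The following sketch mirrors that control flow. The function names are hypothetical, and the root-mean-square value is a simple stand-in, not the loudness measure the Prosody module computes.

```python
import math
from typing import Dict, List, Optional, Tuple

Segment = Tuple[float, float]  # (start_s, end_s)


def rms(chunk: List[float]) -> float:
    """Root-mean-square amplitude, used here only as a placeholder feature."""
    if not chunk:
        return 0.0
    return math.sqrt(sum(s * s for s in chunk) / len(chunk))


def analyze(samples: List[float], sample_rate: int,
            vad_segments: Optional[List[Segment]] = None) -> List[Dict[str, float]]:
    """Return one result per utterance: the full input, or each VAD segment."""
    if vad_segments is None:
        # VAD disabled: the whole input counts as a single utterance.
        spans = [(0.0, len(samples) / sample_rate)]
    else:
        spans = vad_segments
    results = []
    for start, end in spans:
        chunk = samples[int(round(start * sample_rate)):int(round(end * sample_rate))]
        results.append({"start": start, "end": end, "rms": rms(chunk)})
    return results


# Toy 4-second signal at 4 samples per second: silence, loud, silence, quiet.
samples = [0.0] * 4 + [1.0, -1.0, 1.0, -1.0] + [0.0] * 4 + [0.5, -0.5, 0.5, -0.5]
whole = analyze(samples, 4)
per_segment = analyze(samples, 4, vad_segments=[(1.0, 2.0), (3.0, 4.0)])
print(len(whole), len(per_segment))
```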

The openSMILE Features module performs feature extraction on speech. Currently, it includes the following two feature sets based on openSMILE:

- ComParE-2016

This feature set consists of a total of 6373 audio features that are constructed by extracting energy, voicing, and spectral low-level descriptors and computing statistical functionals on them such as percentiles, moments, peaks, and temporal features.

While originally designed for the task of speaker emotion recognition, it has been shown to also work well for a wide range of other audio classification tasks.

- GeMAPS+

The GeMAPS+ feature set is a proprietary extension of the GeMAPS feature set described in Eyben et al. The set consists of a total of 276 audio features and has been designed as a minimalistic, general-purpose set for common analysis and classification tasks on voice.
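Both feature sets follow the same pattern: frame-level low-level descriptors are summarized by statistical functionals into one fixed-length vector per recording. The sketch below illustrates that pattern on a single toy energy contour with a handful of functionals. It does not reproduce the actual ComParE-2016 or GeMAPS+ definitions, and the chosen functionals are assumptions for the example.

```python
import statistics
from typing import Dict, List


def functionals(contour: List[float]) -> Dict[str, float]:
    """Summarize one frame-level descriptor contour with a few statistics."""
    # A peak is a frame that is strictly higher than both of its neighbours.
    peaks = sum(
        1 for i in range(1, len(contour) - 1)
        if contour[i - 1] < contour[i] > contour[i + 1]
    )
    return {
        "mean": statistics.fmean(contour),
        "stdev": statistics.pstdev(contour),
        "median": statistics.median(contour),
        "range": max(contour) - min(contour),
        "peaks": float(peaks),
    }


# Toy energy values, one per analysis frame.
energy = [0.1, 0.4, 0.2, 0.6, 0.3]
features = functionals(energy)
print(sorted(features))
```

Applying many functionals to many descriptors is how a feature set reaches thousands of features per recording.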

The ASR module uses the whisper.cpp library to transcribe spoken language into written text. Because the module is integrated into devAIce®, you no longer need to integrate an external ASR engine separately and combine it with audio analysis: devAIce® offers an all-in-one solution.

The Audio Quality module estimates the Signal to Noise Ratio (SNR) and the reverberation time (RT60) of a given audio input. Input signals of any duration are accepted. Every audio input yields one value for each parameter.
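For orientation, SNR in decibels is ten times the base-10 logarithm of the ratio of signal power to noise power. The sketch below only illustrates that definition on toy data in which signal and noise are known separately. An estimator working on real recordings, such as the Audio Quality module, has to infer the value from the mixed input, which this example does not attempt.

```python
import math
from typing import List


def power(samples: List[float]) -> float:
    """Mean squared amplitude of a sample list."""
    return sum(s * s for s in samples) / len(samples)


def snr_db(signal: List[float], noise: List[float]) -> float:
    """Signal-to-noise ratio in decibels, given separate signal and noise samples."""
    return 10.0 * math.log10(power(signal) / power(noise))


# Toy data: the noise has one tenth of the signal amplitude.
signal = [1.0, -1.0] * 8
noise = [0.1, -0.1] * 8
print(f"{snr_db(signal, noise):.1f} dB")
```

A tenfold amplitude ratio corresponds to a hundredfold power ratio, which is 20 dB.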

Personal expression

The tone of voice

Personal expression is transmitted through the voice. In our everyday lives, we automatically perform an auditive analysis when we speak to someone. devAIce® focuses on vocal expression and derives emotional dimensions from voice analysis.

Voice Activity Detection

Voice or background noise?

devAIce® robustly detects the presence and absence of voice in real time with minimal latency.

Speaker Attributes

Who is speaking? devAIce® can give you insights into speaker attributes like age or gender.

Acoustic Scene Detection

Where are you? devAIce® can distinguish 3 acoustic classes – indoor, outdoor, transport. The module analyzes concrete acoustic scenes for each acoustic class.

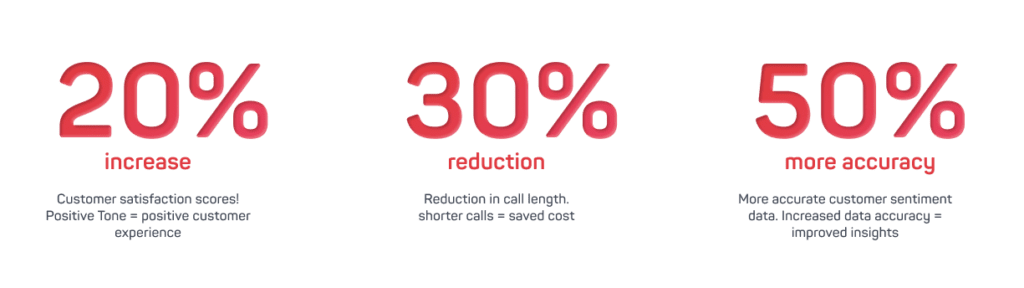

Jabra's Engage AI

Powered by audEERING

Jabra’s Engage AI is the Call Center tool for enhanced conversations between agents and clients. The integration of audEERING’s advanced AI-powered audio analysis technology devAIce® into the call center context provides a new level of agent and customer experience. The tool fits into numerous contexts, always with the goal of improving communication.

Source: https://www.jabra.com/software-and-services/jabra-engage-ai/

MW Research

Voice analysis in market research

“Improve product or communication testing with emotional feedback! Our method analyzes the emotional state of your customers during the evaluation. This gives you a comprehensive insight into the emotional user experience.”

Hanson Robotics

Robots with empathy

The cooperation between Hanson Robotics and audEERING® also seeks to further develop Sophia Hanson’s social skills. In the future, Sophia will recognize the nuances of a conversation and be able to respond empathically as a result.

In nursing and other fields affected by the shortage of skilled workers, such robots equipped with social AI can help in the future.

The Simulation Crew

“Emotions are an essential part of our interactions,” says Eric Jutten, CEO of Dutch company The Simulation Crew.

To ensure that their VR trainer, Iva, was capable of empathy, they chose devAIce® XR. Using the XR plugin, they integrated Voice AI into their product.

Get the minutes you need!

devAIce® prepaid plans

PREPAID ENTRY

- 600 minutes

- Web API

- BASE Package, incl. one selected module

PREPAID PLUS

- 6,000 minutes

- Web API

- BASE Package, incl. one selected module

Packages Overview

BASE

+ Voice Activity Detection

+ Acoustic Event Detection

+ Speaker Verification

+ ASR

+ Audio Quality

+ Features

EXPRESSION

+ Vocal Expression

+ Vocal Expression Large

+ Multi-Modal Expression

+ Prosody

SPEAKER

+ Age

+ Perceived Gender

SCENE

+ 3 Scenes with 8 Sub-Scenes

+ Indoor

+ Outdoor

+ Transport

devAIce® SDK: lightweight, native on-device

The devAIce® SDK is available for all major desktop, mobile and embedded platforms. It also performs well on devices with low computational resources, like wearables and hearables.

- Platforms: Windows, Linux, macOS, Android, iOS

- Processor architectures: x86-64, ARMv8

devAIce® Web API: cloud-powered, native for the web

devAIce® Web API is the easiest way to integrate audio AI into your web- and cloud-based applications. On-premise deployment options for the highest data security requirements are available. The Web API is accessible:

- via a command-line (CLI) tool

- via included client libraries: Python, .NET, Java, JavaScript, and PHP

- by directly sending HTTP requests

devAIce® XR: the Unity & Unreal plugin

devAIce® XR integrates intelligent audio analysis into virtuality. The plugin is designed to be integrated into your Unity or Unreal project.

Don’t miss the moment to include the most important part of interaction: Empathy.

Product Owner Talk

With Milenko Saponja


Several use cases

For Voice AI technology

Customers, projects &

Success stories

Contact us.

Contact audEERING® now to schedule a demo or discuss how our products and solutions can benefit your organization.