Blog

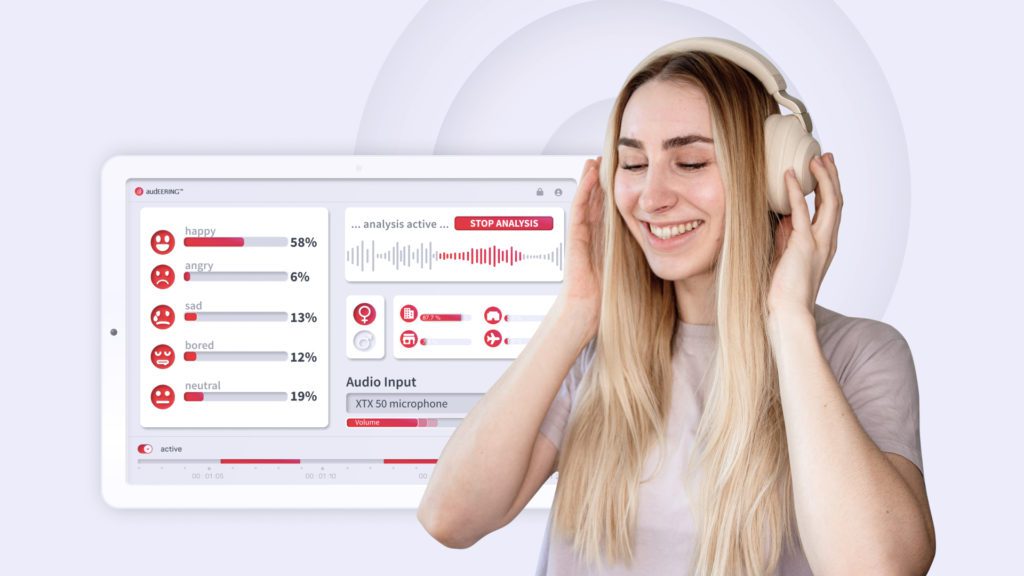

Today, we are happy to announce the public release of devAIce® SDK 3.7.0. This update comes with several noteworthy model updates for emotion and age recognition, the deprecation of the Sentiment module, and numerous other minor tweaks, improvements and fixes.

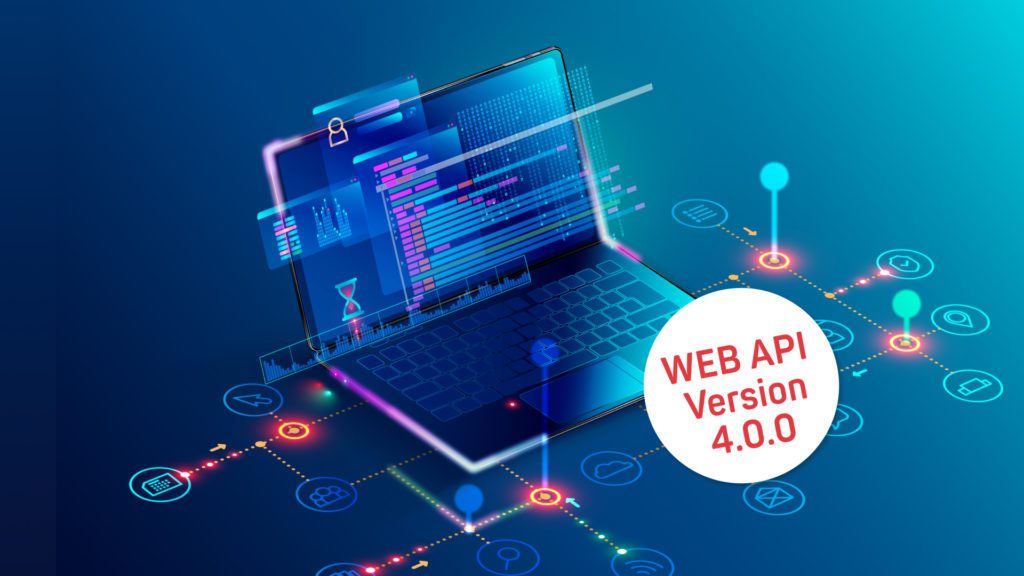

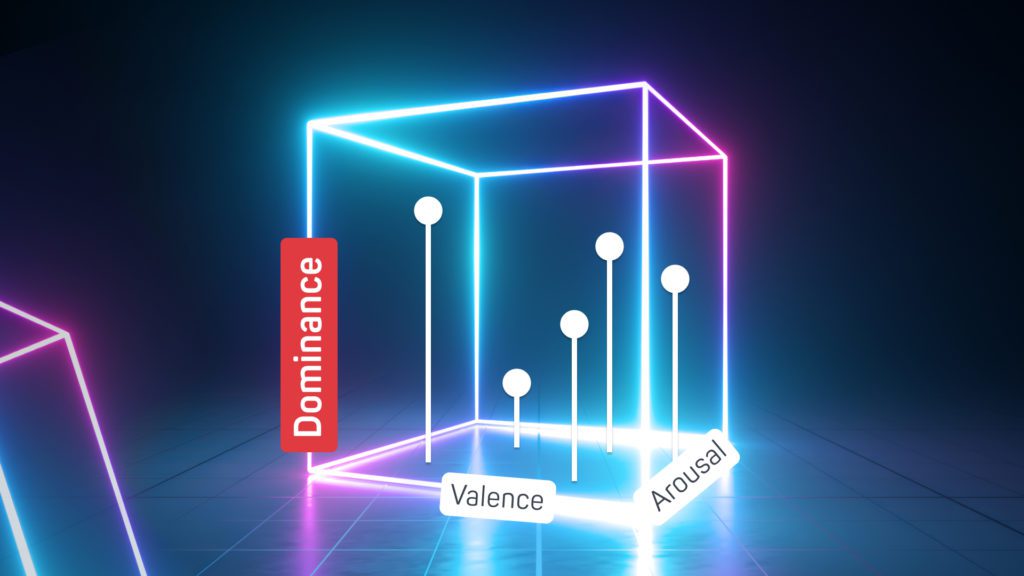

We are proud to announce version 4.0.0, a major update to devAIce Web API that is available to customers today. Most notably, this release introduces a modernized and simplified set of API endpoints, all-new client libraries supporting more programming languages, OpenAPI compatibility, and an enhanced command-line interface tool. It also includes the recent model updates and performance improvements from the latest devAIce SDK release, namely support for the Dominance emotion dimension and accuracy improvements of up to 15 percentage points.

The devAIce® team is proud to announce the availability of devAIce SDK 3.6.1, which comes with a number of major enhancements, exciting new functionality and smaller fixes since the last publicly announced version, 3.4.0. This blog post summarizes the most important changes introduced in devAIce® SDK since then.

SHIFT (MetamorphoSis of cultural Heritage Into augmented hypermedia assets For enhanced accessibiliTy and inclusion) supports the adoption of digital transformation strategies and the uptake of tools within the creative and cultural industries (CCI), where progress has been lagging.

audEERING is now participating in the SHIFT project with the goal of synthesizing emotional speech descriptions of historical exhibits. This aims to change the way people experience historical monuments, especially people who are visually impaired.

We use avatars to show our identity or to assume other identities, and we want to make sure we express ourselves the way we want. The key factor in expression is emotion. Without recognizing emotions, we have no way of adapting a player's avatar to reflect their emotions and individuality.

With entertAIn play, recognizing emotion becomes possible.

Human interaction is based on language, on context, and on world knowledge that we share. As a Voice AI company, we know that emotion is the key factor. Emotional expression moves us and creates a collective response. It is a key factor in society and the basis for all the decisions we make. When creating virtual realities, new dimensions and augmented experiences, this key factor must not be missing.

2021 has been an exciting year for our researchers working on the recognition of emotions from speech. Benefiting from recent advances in transformer-based architectures, we have for the first time built models that predict valence with precision as high as for arousal.

We are proud to announce a new class of next-gen emotion models coming to devAIce with our latest 3.4.0 release of the devAIce™ SDK/Web API.

Developing AI technology as we do at audEERING, we need to understand human perception. In everyday life, perception enables us to recognize the emotional state of our communication partner in different situations. In the process of Human Machine Learning, we need to give the algorithm essential input. How do we at audEERING create AI?

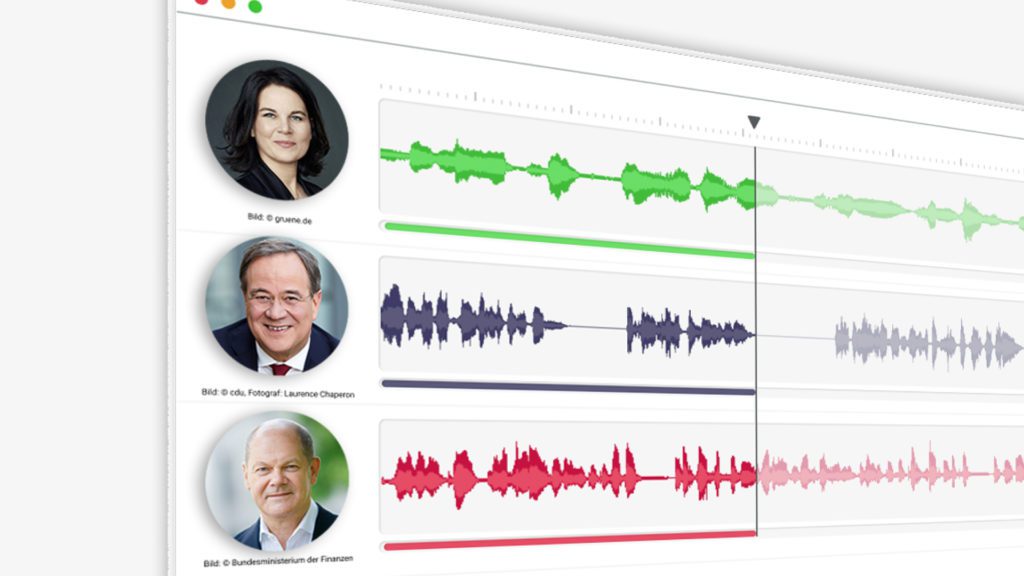

The German politicians Annalena Baerbock, Armin Laschet and Olaf Scholz were analyzed by audEERING's Audio AI during their candidacies for chancellor. audEERING's analyses do not refer to the content of the speeches but to acoustic, linguistic and emotional characteristics. These are identified using scientific procedures and audEERING's award-winning AI technology devAIce™.

Just like last year, we were proud to present our ideas and take part in the devcom Developer Conference 2021. In a panel discussion, we talked about the entertAIn brand. If you missed the talk, you can watch it on our YouTube channel.